Introduction

zarrs is a Rust library for the Zarr V2 and Zarr V3 array storage formats.

If you don’t know what Zarr is, check out:

- the official Zarr website: zarr.dev, and

- the Zarr V3 specification.

zarrs was originally designed exclusively as a Rust library for Zarr V3.

However, it now supports a V3-compatible subset of Zarr V2, and has Python and C/C++ bindings.

This book details the Rust implementation.

🚀 zarrs is Fast 🚀

zarrs is benchmarked against other Zarr V3 implementations.

Check out the benchmarks that measure the time to round trip a \(1024 \times 2048 \times 2048\) uint16 array encoded in various ways.

The zarr_benchmarks repository includes additional benchmarks.

Python Bindings: zarrs-python

zarrs-python exposes a high-performance zarrs-backed codec pipeline to the reference Python implementation of Zarr, zarr-python:

from zarr import config

import zarrs # noqa: F401

config.set({"codec_pipeline.path": "zarrs.ZarrsCodecPipeline"})

That’s it!

There is no need to learn a new API and it is supported by downstream libraries like dask.

However, zarrs-python has some limitations.

Consult the zarrs-python README or PyPI docs for more details.

Rust Crates

The Zarr specification is inherently unstable. It is under active development and new extensions are regularly being introduced.

The zarrs crate has been split into multiple crates to:

- allow external implementations of stores and extension points to target a relatively stable API compatible with a range of zarrs versions,

- enable automatic backporting of metadata compatibility fixes and changes due to standardisation,

- stay up-to-date with unstable public dependencies (e.g. opendal, object_store, icechunk, etc.) without impacting the release cycle of zarrs, and

- improve compilation times.

Below is an overview of the crate structure:

The core crate is zarrs.

For local filesystem stores (referred to as native Zarr), this is the only crate you need to depend on.

zarrs has quite a few supplementary crates:

Tip

The supplementary crates are transitive dependencies of zarrs, and are re-exported in the crate root. You do not need to add them as direct dependencies.

Note

The supplementary crates are separated from zarrs to enable development of Zarr extensions and stores targeting a more stable API than zarrs itself.

Additional crates need to be added as dependencies in order to use:

- remote stores (e.g. HTTP, S3, GCP, etc.),

- zip stores, or

- icechunk transactional storage.

The Stores chapter details the various types of stores and their associated crates.

C/C++ Bindings: zarrs_ffi

A subset of zarrs exposed as a C/C++ API.

zarrs_ffi is a single-header library: zarrs.h.

Consult the zarrs_ffi README and API docs for more information.

CLI Tools: zarrs_tools

Various tools for creating and manipulating Zarr V3 data with the zarrs Rust crate.

This crate is detailed in the zarrs_tools chapter.

Zarr Metadata Conventions

ome_zarr_metadata

A Rust library for OME-Zarr (previously OME-NGFF) metadata.

OME-Zarr, formerly known as OME-NGFF (Open Microscopy Environment Next Generation File Format), is a specification designed to support modern scientific imaging needs. It is widely used in microscopy, bioimaging, and other scientific fields requiring high-dimensional data management, visualisation, and analysis.

Installation

Prerequisites

The most recent zarrs requires Rust version

You can check your current Rust version by running:

rustc --version

If you don’t have Rust installed, follow the official Rust installation guide.

Some optional zarrs codecs require:

These are typically available through package managers on Linux, Homebrew on Mac, etc.

Adding zarrs to Your Rust Library/Application

zarrs is a Rust library.

To use it as a dependency in your Rust project, add it to your Cargo.toml file:

[dependencies]

zarrs = "0.18" # Replace with the latest version

The latest version is

Crate Features

zarrs has a number of features for stores, codecs, or APIs, many of which are enabled by default.

The example below demonstrates how to disable default features and explicitly enable required features:

[dependencies.zarrs]

version = "0.18"

default-features = false

features = ["filesystem", "blosc"]

See zarrs (docs.rs) - Crate Features for an up-to-date list of all available features.

Supplementary Crates

Remote store support and other capabilities are provided by supplementary crates. See the Rust Crates section and Stores chapter for an overview of the crates available.

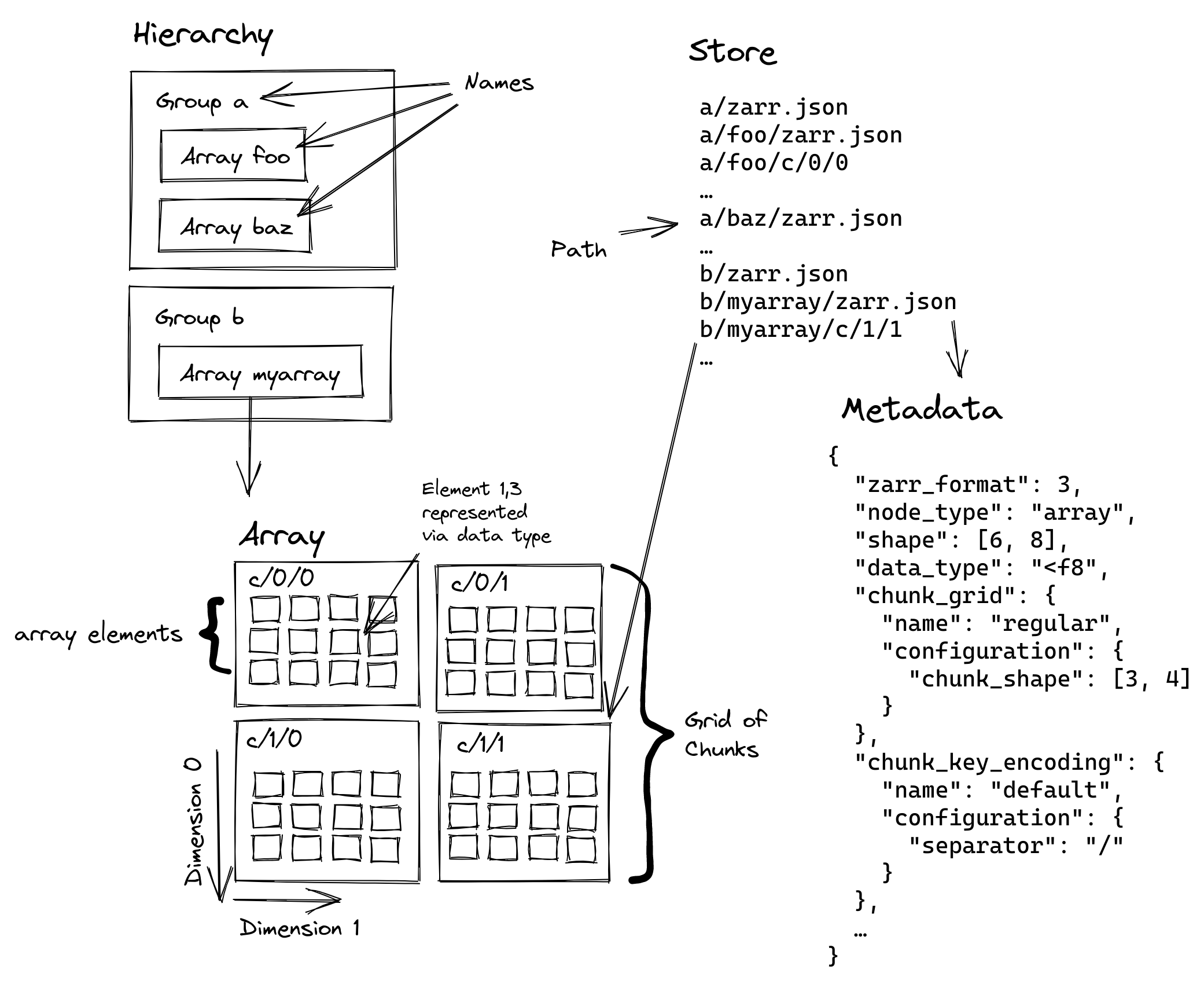

Zarr Stores

A Zarr store is a system that can be used to store and retrieve data from a Zarr hierarchy. For example: a filesystem, HTTP server, FTP server, Amazon S3 bucket, etc. A store implements a key/value store interface for storing, retrieving, listing, and erasing keys.

The Zarr V3 storage API is detailed in the Zarr V3 specification.
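The key/value interface can be illustrated with a toy, std-only sketch. The KeyValueStore trait and BTreeMapStore type are hypothetical and are not the zarrs storage traits. They only show the four operations a store provides (storing, retrieving, listing, and erasing) over string keys and byte values.

```rust
use std::collections::BTreeMap;
use std::sync::Mutex;

/// Hypothetical minimal key/value store interface (not the zarrs storage traits).
trait KeyValueStore {
    fn set(&self, key: &str, value: Vec<u8>);
    fn get(&self, key: &str) -> Option<Vec<u8>>;
    fn list_prefix(&self, prefix: &str) -> Vec<String>;
    fn erase(&self, key: &str);
}

/// In-memory store; a BTreeMap keeps keys sorted, so listings are ordered.
#[derive(Default)]
struct BTreeMapStore {
    data: Mutex<BTreeMap<String, Vec<u8>>>,
}

impl KeyValueStore for BTreeMapStore {
    fn set(&self, key: &str, value: Vec<u8>) {
        self.data.lock().unwrap().insert(key.to_string(), value);
    }

    fn get(&self, key: &str) -> Option<Vec<u8>> {
        self.data.lock().unwrap().get(key).cloned()
    }

    fn list_prefix(&self, prefix: &str) -> Vec<String> {
        self.data
            .lock()
            .unwrap()
            .keys()
            .filter(|key| key.starts_with(prefix))
            .cloned()
            .collect()
    }

    fn erase(&self, key: &str) {
        self.data.lock().unwrap().remove(key);
    }
}
```

The Mutex provides interior mutability, so a single store can be shared behind an Arc. The zarrs examples in this chapter wrap their stores in an Arc in the same way.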

The Sync and Async API

Zarr Groups and Arrays are the core components of a Zarr hierarchy.

In zarrs, both structures have a synchronous and an asynchronous API.

The applicable API depends on the storage that the group or array is created with.

Async API methods typically have an async_ prefix.

In subsequent chapters, async API method calls are shown commented out below their sync equivalent.

Warning

The async API is still considered experimental, and it requires the async feature.

Synchronous Stores

Memory

MemoryStore is a synchronous in-memory store available in the zarrs_storage crate (re-exported as zarrs::storage).

#![allow(unused)]

fn main() {

extern crate zarrs;

use std::sync::Arc;

use zarrs::storage::ReadableWritableListableStorage;

use zarrs::storage::store::MemoryStore;

let store: ReadableWritableListableStorage = Arc::new(MemoryStore::new());

}

Note that in-memory stores do not persist data, and they are not suited to distributed (i.e. multi-process) usage.

Filesystem

FilesystemStore is a synchronous filesystem store available in the zarrs_filesystem crate (re-exported as zarrs::filesystem with the filesystem feature).

#![allow(unused)]

fn main() {

extern crate zarrs;

use std::sync::Arc;

use zarrs::storage::ReadableWritableListableStorage;

use zarrs::filesystem::FilesystemStore;

let base_path = "/";

let store: ReadableWritableListableStorage =

Arc::new(FilesystemStore::new(base_path)?);

Ok::<(), Box<dyn std::error::Error>>(())

}

The base path is the root of the filesystem store. Node paths are relative to the base path.

The filesystem store also has a new_with_options constructor.

Currently the only option available for filesystem stores is whether or not to enable direct I/O on Linux.
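The following sketch shows how node paths relate to the base path. The hypothetical metadata_file helper computes where a Zarr V3 node's metadata document would live on disk. It assumes the Zarr V3 key layout, in which the node at /group/array stores its metadata under the key group/array/zarr.json. The helper is not part of zarrs and does not reflect how FilesystemStore is implemented.

```rust
use std::path::{Path, PathBuf};

/// Hypothetical helper: where the Zarr V3 metadata document of the node at
/// `node_path` (e.g. "/group/array") would be stored under `base_path`.
fn metadata_file(base_path: &Path, node_path: &str) -> PathBuf {
    // Node paths start with "/", but they are relative to the base path.
    // Pushing an absolute path would discard the base path, so strip it first.
    let relative = node_path.trim_start_matches('/');
    let mut path = base_path.to_path_buf();
    if !relative.is_empty() {
        path.push(relative);
    }
    // Zarr V3 stores node metadata under the key "<node path>/zarr.json".
    path.push("zarr.json");
    path
}
```

Zarr V2 nodes use different metadata keys (.zgroup, .zarray, and .zattrs) instead of zarr.json.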

HTTP

HTTPStore is a read-only synchronous HTTP store available in the zarrs_http crate.

#![allow(unused)]

fn main() {

extern crate zarrs;

extern crate zarrs_http;

use std::sync::Arc;

use zarrs::storage::ReadableStorage;

use zarrs_http::HTTPStore;

let http_store: ReadableStorage = Arc::new(HTTPStore::new("http://...")?);

Ok::<(), Box<dyn std::error::Error>>(())

}

Note

The HTTP stores provided by object_store and opendal (see below) provide a more comprehensive feature set.

Asynchronous Stores

object_store

The object_store crate is an async object store library for interacting with object stores.

Supported object stores include:

- AWS S3

- Azure Blob Storage

- Google Cloud Storage

- Local files

- Memory

- HTTP/WebDAV Storage

- Custom implementations

zarrs_object_store::AsyncObjectStore wraps object_store::ObjectStore stores.

#![allow(unused)]

fn main() {

extern crate zarrs;

extern crate zarrs_object_store;

extern crate object_store;

use std::sync::Arc;

use zarrs::storage::AsyncReadableStorage;

use zarrs_object_store::AsyncObjectStore;

let options = object_store::ClientOptions::new().with_allow_http(true);

let store = object_store::http::HttpBuilder::new()

.with_url("http://...")

.with_client_options(options)

.build()?;

let store: AsyncReadableStorage = Arc::new(AsyncObjectStore::new(store));

Ok::<(), Box<dyn std::error::Error>>(())

}

OpenDAL

The opendal crate offers a unified data access layer, empowering users to seamlessly and efficiently retrieve data from diverse storage services.

It supports a huge range of services and layers to extend their behaviour.

zarrs_opendal::AsyncOpendalStore wraps opendal::Operator.

#![allow(unused)]

fn main() {

extern crate zarrs;

extern crate zarrs_opendal;

extern crate opendal;

use std::sync::Arc;

use zarrs::storage::AsyncReadableStorage;

use zarrs_opendal::AsyncOpendalStore;

let builder = opendal::services::Http::default().endpoint("http://...");

let operator = opendal::Operator::new(builder)?.finish();

let store: AsyncReadableStorage =

Arc::new(AsyncOpendalStore::new(operator));

Ok::<(), Box<dyn std::error::Error>>(())

}

Note

Some opendal stores can also be used in a synchronous context with zarrs_opendal::OpendalStore, which wraps opendal::BlockingOperator.

Icechunk

icechunk is a transactional storage engine for Zarr designed for use on cloud object storage.

It enables git-like functionality for array data.

See an up-to-date example at https://github.com/zarrs/zarrs_icechunk.

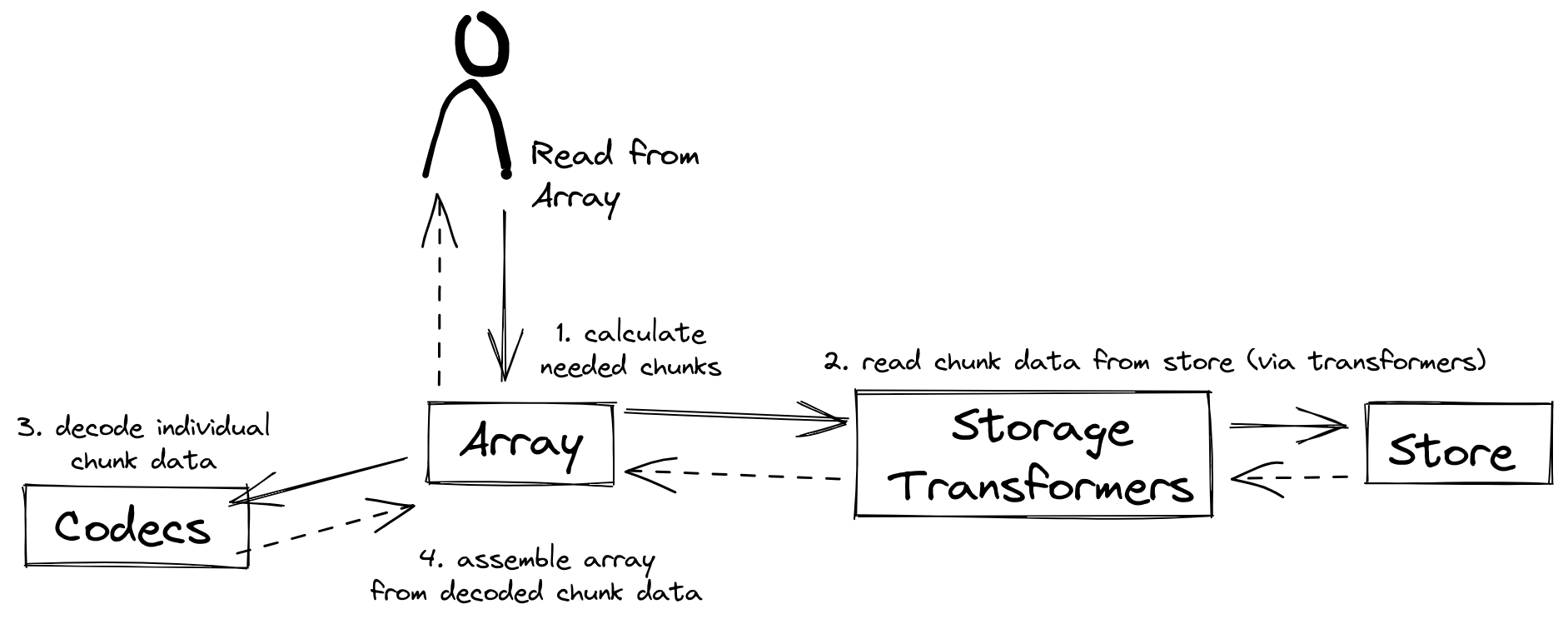

Storage Adapters

Storage adapters can be layered on top of stores to change their functionality.

Zip

A storage adapter for zip files.

#![allow(unused)]

fn main() {

extern crate zarrs_storage;

extern crate zarrs_filesystem;

extern crate zarrs_zip;

use std::sync::Arc;

use std::path::PathBuf;

use zarrs_storage::StoreKey;

use zarrs_filesystem::FilesystemStore;

use zarrs_zip::ZipStorageAdapter;

let fs_root = PathBuf::from("/path/to/a/directory");

let fs_store = Arc::new(FilesystemStore::new(&fs_root)?);

let zip_key = StoreKey::new("zarr.zip")?;

let zip_store = Arc::new(ZipStorageAdapter::new(fs_store, zip_key)?);

// or ZipStorageAdapter::new_with_path

Ok::<(), Box<dyn std::error::Error>>(())

}

Async to Sync

Asynchronous stores can be used in a synchronous context with the zarrs::storage::AsyncToSyncStorageAdapter.

The AsyncToSyncBlockOn trait must be implemented for a runtime or runtime handle in order to block on futures.

See the tokio example below:

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::storage::storage_adapter::async_to_sync::AsyncToSyncBlockOn;

struct TokioBlockOn(tokio::runtime::Runtime); // or handle

impl AsyncToSyncBlockOn for TokioBlockOn {

fn block_on<F: core::future::Future>(&self, future: F) -> F::Output {

self.0.block_on(future)

}

}

}

#![allow(unused)]

fn main() {

extern crate zarrs;

extern crate zarrs_opendal;

struct TokioBlockOn(tokio::runtime::Runtime); // or handle

impl AsyncToSyncBlockOn for TokioBlockOn {

fn block_on<F: core::future::Future>(&self, future: F) -> F::Output {

self.0.block_on(future)

}

}

use zarrs::storage::storage_adapter::async_to_sync::AsyncToSyncBlockOn;

use zarrs::storage::storage_adapter::async_to_sync::AsyncToSyncStorageAdapter;

use std::sync::Arc;

use zarrs::storage::{AsyncReadableStorage, ReadableStorage};

// Create an async store as normal

let path = "http://...";

let builder = opendal::services::Http::default().endpoint(path);

let operator = opendal::Operator::new(builder)?.finish();

let storage: AsyncReadableStorage =

Arc::new(zarrs_opendal::AsyncOpendalStore::new(operator));

// Create a tokio runtime and adapt the store to sync

let block_on = TokioBlockOn(tokio::runtime::Runtime::new()?);

let store: ReadableStorage =

Arc::new(AsyncToSyncStorageAdapter::new(storage, block_on));

Ok::<_, Box<dyn std::error::Error>>(())

}

Warning

Many async stores are not runtime-agnostic (i.e. they only support tokio).
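The following std-only sketch shows what blocking on a future involves. It implements a minimal executor whose block_on method has the same shape as AsyncToSyncBlockOn::block_on. SimpleBlockOn and ThreadWaker are hypothetical names, and the type is not connected to zarrs here. Because it provides no I/O reactor, it can only drive runtime-agnostic futures. Futures from tokio-based stores still need a tokio runtime.

```rust
use std::future::Future;
use std::pin::pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};
use std::thread::{self, Thread};

/// Waker that unparks the thread blocked in `block_on`.
struct ThreadWaker(Thread);

impl Wake for ThreadWaker {
    fn wake(self: Arc<Self>) {
        self.0.unpark();
    }
}

/// Hypothetical runtime-agnostic executor with the same method shape as
/// `AsyncToSyncBlockOn::block_on` (it does not implement that trait here).
struct SimpleBlockOn;

impl SimpleBlockOn {
    fn block_on<F: Future>(&self, future: F) -> F::Output {
        // Pin the future on the stack so that it can be polled.
        let mut future = pin!(future);
        let waker = Waker::from(Arc::new(ThreadWaker(thread::current())));
        let mut cx = Context::from_waker(&waker);
        loop {
            match future.as_mut().poll(&mut cx) {
                Poll::Ready(output) => return output,
                // Sleep until the waker unparks this thread. A spurious wake-up
                // only leads to another poll.
                Poll::Pending => thread::park(),
            }
        }
    }
}
```

With zarrs as a dependency, the same method body could be placed in an impl AsyncToSyncBlockOn for SimpleBlockOn block. This is untested here, and it is only appropriate for stores that do not depend on a specific runtime.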

Usage Log

The zarrs::storage::UsageLogStorageAdapter logs storage method calls.

It is intended to aid in debugging and optimising performance by revealing storage access patterns.

#![allow(unused)]

fn main() {

extern crate zarrs;

extern crate chrono;

use std::sync::Arc;

use std::sync::Mutex;

use zarrs::storage::store::MemoryStore;

use zarrs::storage::storage_adapter::usage_log::UsageLogStorageAdapter;

let store = Arc::new(MemoryStore::new());

let log_writer = Arc::new(Mutex::new(

// std::io::BufWriter::new(

std::io::stdout(),

// )

));

let store = Arc::new(UsageLogStorageAdapter::new(store, log_writer, || {

chrono::Utc::now().format("[%T%.3f] ").to_string()

}));

}

Performance Metrics

The zarrs::storage::PerformanceMetricsStorageAdapter accumulates metrics, such as bytes read and written.

It is intended to aid in testing by allowing the application to validate that metrics (e.g., bytes read/written, total read/write operations) match expected values for specific operations.
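The idea behind a metrics adapter can be sketched without zarrs: wrap an inner store, delegate each call, and accumulate counters. The ReadStore trait, MapStore, and MetricsAdapter types are hypothetical stand-ins, not the PerformanceMetricsStorageAdapter API. The counters use atomics so the adapter can be shared across threads.

```rust
use std::collections::HashMap;
use std::sync::atomic::{AtomicU64, Ordering};

/// Hypothetical read interface (not the zarrs storage traits).
trait ReadStore {
    fn get(&self, key: &str) -> Option<Vec<u8>>;
}

/// Trivial in-memory store used as the inner store.
struct MapStore(HashMap<String, Vec<u8>>);

impl ReadStore for MapStore {
    fn get(&self, key: &str) -> Option<Vec<u8>> {
        self.0.get(key).cloned()
    }
}

/// Adapter layered over any ReadStore that accumulates read metrics.
struct MetricsAdapter<S> {
    inner: S,
    reads: AtomicU64,
    bytes_read: AtomicU64,
}

impl<S: ReadStore> MetricsAdapter<S> {
    fn new(inner: S) -> Self {
        Self { inner, reads: AtomicU64::new(0), bytes_read: AtomicU64::new(0) }
    }

    /// Total number of read operations, including reads of missing keys.
    fn reads(&self) -> u64 {
        self.reads.load(Ordering::Relaxed)
    }

    /// Total number of bytes returned by successful reads.
    fn bytes_read(&self) -> u64 {
        self.bytes_read.load(Ordering::Relaxed)
    }
}

impl<S: ReadStore> ReadStore for MetricsAdapter<S> {
    fn get(&self, key: &str) -> Option<Vec<u8>> {
        // Delegate to the inner store, then record the operation and its size.
        let value = self.inner.get(key);
        self.reads.fetch_add(1, Ordering::Relaxed);
        if let Some(bytes) = &value {
            self.bytes_read.fetch_add(bytes.len() as u64, Ordering::Relaxed);
        }
        value
    }
}
```

A test can then perform an operation through the adapter and assert that the counters match the expected number of reads and bytes.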

#![allow(unused)]

fn main() {

extern crate zarrs;

use std::sync::Arc;

use zarrs::storage::store::MemoryStore;

use zarrs::storage::storage_adapter::performance_metrics::PerformanceMetricsStorageAdapter;

let store = Arc::new(MemoryStore::new());

let store = Arc::new(PerformanceMetricsStorageAdapter::new(store));

// assert_eq!(store.bytes_read(), ...);

}

Zarr Groups

A group is a node in a Zarr hierarchy that may have child nodes (arrays or groups).

Each array or group in a hierarchy is represented by a metadata document, which is a machine-readable document containing essential processing information about the node. For a group, the metadata document contains the Zarr version and optional user attributes.

Opening an Existing Group

An existing group can be opened with Group::open (or async_open):

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::group::Group;

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let group = zarrs::group::GroupBuilder::new().build(store.clone(), "/group")?;

group.store_metadata()?;

let group = Group::open(store.clone(), "/group")?;

// let group = Group::async_open(store.clone(), "/group").await?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Note

These methods will open a Zarr V2 or Zarr V3 group. If you only want to open a specific Zarr version, see open_opt and MetadataRetrieveVersion.

Creating Attributes

Attributes are encoded in a JSON object (serde_json::Map<String, serde_json::Value>).

Here are a few different approaches for constructing a JSON object:

#![allow(unused)]

fn main() {

let value = serde_json::json!({

"spam": "ham",

"eggs": 42

});

let attributes: serde_json::Map<String, serde_json::Value> =

serde_json::json!(value).as_object().unwrap().clone();

let serde_json::Value::Object(attributes) = value else { unreachable!() };

}

#![allow(unused)]

fn main() {

extern crate serde_json;

use serde_json::Value;

let mut attributes = serde_json::Map::default();

attributes.insert("spam".to_string(), Value::String("ham".to_string()));

attributes.insert("eggs".to_string(), Value::Number(42.into()));

}

Alternatively, you can encode your attributes in a struct deriving Serialize, and serialize it to a serde_json::Map<String, serde_json::Value>.

Creating a Group with the GroupBuilder

Note

The GroupBuilder only supports Zarr V3 groups.

#![allow(unused)]

fn main() {

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let attributes = serde_json::Map::default();

let group = zarrs::group::GroupBuilder::new()

.attributes(attributes)

.build(store.clone(), "/group")?;

group.store_metadata()?;

// group.async_store_metadata().await?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Note that the /group path is relative to the root of the store.

Remember to Store Metadata!

Group metadata must always be stored explicitly, even if the attributes are empty. Support for implicit groups without metadata was removed long after provisional acceptance of the Zarr V3 specification.

Tip

Consider deferring storage of group metadata until child group/array operations are complete. The presence of valid metadata can act as a signal that the data is ready.

Creating a Group from GroupMetadata

Zarr V3

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::group::Group;

use zarrs::metadata::{GroupMetadata, v3::GroupMetadataV3};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let attributes = serde_json::Map::default();

// Specify the group metadata

let metadata: GroupMetadata =

GroupMetadataV3::new().with_attributes(attributes).into();

// Create the group and write the metadata

let group =

Group::new_with_metadata(store.clone(), "/group", metadata)?;

group.store_metadata()?;

// group.async_store_metadata().await?;

Ok::<_, Box<dyn std::error::Error>>(())

}

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::group::Group;

use zarrs::metadata::{GroupMetadata, v3::GroupMetadataV3};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

// Specify the group metadata

let metadata: GroupMetadataV3 = serde_json::from_str(

r#"{

"zarr_format": 3,

"node_type": "group",

"attributes": {

"spam": "ham",

"eggs": 42

},

"unknown": {

"must_understand": false

}

}"#,

)?;

// Create the group and write the metadata

let group =

Group::new_with_metadata(store.clone(), "/group", metadata.into())?;

group.store_metadata()?;

// group.async_store_metadata().await?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Zarr V2

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::group::Group;

use zarrs::metadata::{GroupMetadata, v2::GroupMetadataV2};

let attributes = serde_json::Map::default();

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

// Specify the group metadata

let metadata: GroupMetadata =

GroupMetadataV2::new().with_attributes(attributes).into();

// Create the group and write the metadata

let group = Group::new_with_metadata(store.clone(), "/group", metadata)?;

group.store_metadata()?;

// group.async_store_metadata().await?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Mutating Group Metadata

Group attributes can be changed after initialisation with Group::attributes_mut:

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::metadata::{GroupMetadata, v2::GroupMetadataV2};

use zarrs::group::Group;

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let metadata: GroupMetadata = GroupMetadataV2::new().into();

let mut group = Group::new_with_metadata(store.clone(), "/group", metadata)?;

group

.attributes_mut()

.insert("foo".into(), serde_json::Value::String("bar".into()));

group.store_metadata()?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Don’t forget to store the updated metadata after attributes have been mutated.

Zarr Arrays

An array is a node in a hierarchy that may not have any child nodes.

An array is a data structure with zero or more dimensions whose lengths define the shape of the array. An array contains zero or more data elements all of the same data type.

The following sections will detail the initialisation, reading, and writing of arrays.
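The arrays in the following sections are divided into chunks by a regular chunk grid. In a regular grid, the number of chunks along each dimension is the array length divided by the chunk length, rounded up, and each chunk index maps to a range of array elements. The std-only sketch below illustrates this arithmetic. Its helpers are hypothetical, not the zarrs chunk grid API, and chunk lengths are assumed to be non-zero.

```rust
use std::ops::Range;

/// Number of chunks along each dimension of a regular chunk grid
/// (array length divided by chunk length, rounded up).
fn chunk_grid_shape(array_shape: &[u64], chunk_shape: &[u64]) -> Vec<u64> {
    array_shape
        .iter()
        .zip(chunk_shape)
        .map(|(&len, &chunk)| (len + chunk - 1) / chunk)
        .collect()
}

/// Array element ranges covered by the chunk at `chunk_indices`, clipped to the
/// array shape, or None if the indices are outside the chunk grid.
fn chunk_ranges(array_shape: &[u64], chunk_shape: &[u64], chunk_indices: &[u64]) -> Option<Vec<Range<u64>>> {
    let grid = chunk_grid_shape(array_shape, chunk_shape);
    let in_bounds = chunk_indices.len() == grid.len()
        && chunk_indices.iter().zip(&grid).all(|(index, count)| index < count);
    if !in_bounds {
        return None;
    }
    Some(
        chunk_indices
            .iter()
            .zip(chunk_shape)
            .zip(array_shape)
            .map(|((&index, &chunk), &len)| (index * chunk)..((index + 1) * chunk).min(len))
            .collect(),
    )
}
```

The ranges of chunks on the upper edge of the array are clipped here. In Zarr, those edge chunks are still encoded with the full chunk shape.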

Array Initialisation

Opening an Existing Array

An existing array can be opened with Array::open (or async_open):

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::array::{Array, ArrayBuilder, data_type};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array_path = "/group/array";

let array = ArrayBuilder::new(

vec![8, 8], // array shape

vec![4, 4], // regular chunk shape

data_type::float32(),

f32::NAN,

).build(store.clone(), array_path)?;

array.store_metadata()?;

let array = Array::open(store.clone(), array_path)?;

// let array = Array::async_open(store.clone(), array_path).await?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Note

These methods will open a Zarr V2 or Zarr V3 array. If you only want to open a specific Zarr version, see open_opt and MetadataRetrieveVersion.

Creating a Zarr V3 Array with the ArrayBuilder

Note

The ArrayBuilder only supports Zarr V3 arrays.

#![allow(unused)]

fn main() {

extern crate zarrs;

use std::sync::Arc;

use zarrs::array::{Array, ArrayBuilder, data_type};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array_path = "/group/array";

let array = ArrayBuilder::new(

vec![8, 8], // array shape

vec![4, 4], // regular chunk shape

data_type::float32(),

f32::NAN,

)

// .bytes_to_bytes_codecs(vec![]) // uncompressed

.bytes_to_bytes_codecs(vec![

Arc::new(zarrs::array::codec::GzipCodec::new(5)?),

])

.dimension_names(["y", "x"].into())

// .attributes(...)

// .storage_transformers(vec![].into())

.build(store.clone(), array_path)?;

array.store_metadata()?;

// array.async_store_metadata().await?;

Ok::<_, Box<dyn std::error::Error>>(())

}

ArrayBuilder::new supports more advanced initialisation from metadata, explicit extensions, etc.

Consult the documentation.

Tip

The Group Initialisation Chapter has tips for creating attributes.

Remember to Store Metadata!

Array metadata must always be stored explicitly, otherwise an array cannot be opened.

Tip

Consider deferring storage of array metadata until after chunk operations are complete. The presence of valid metadata can act as a signal that the data is ready.

Creating a Zarr V3 Sharded Array

The ShardingCodecBuilder is useful for creating an array that uses the sharding_indexed codec.
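The sharding_indexed codec splits each chunk (a shard) into inner chunks, and the inner chunk shape must evenly divide the shard shape. The std-only sketch below computes the number of inner chunks per shard and the size of the shard index before index codecs are applied. It uses hypothetical helpers, not the zarrs API. The index is taken to hold one pair of uint64 values (offset and size) per inner chunk, and index codecs such as a checksum can add to the stored size. With a [4, 4] shard and [2, 2] inner chunks, each shard holds 4 inner chunks.

```rust
/// Inner chunks per shard along each dimension, or None if the inner chunk
/// shape does not evenly divide the shard shape.
fn inner_chunks_per_shard(shard_shape: &[u64], inner_chunk_shape: &[u64]) -> Option<Vec<u64>> {
    if shard_shape.len() != inner_chunk_shape.len() {
        return None;
    }
    shard_shape
        .iter()
        .zip(inner_chunk_shape)
        // Check for zero first so that the modulo cannot divide by zero.
        .map(|(&shard, &inner)| (inner != 0 && shard % inner == 0).then(|| shard / inner))
        .collect()
}

/// Shard index size in bytes before any index codecs are applied:
/// one (offset, nbytes) pair of u64 values per inner chunk.
fn shard_index_size(chunks_per_shard: &[u64]) -> u64 {
    let inner_chunks: u64 = chunks_per_shard.iter().product();
    inner_chunks * 2 * std::mem::size_of::<u64>() as u64
}
```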

#![allow(unused)]

fn main() {

extern crate zarrs;

use std::sync::Arc;

use std::num::NonZeroU64;

use zarrs::array::{Array, ArrayBuilder, data_type, codec::array_to_bytes::sharding::ShardingCodecBuilder};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array_path = "/group/array";

let mut sharding_codec_builder = ShardingCodecBuilder::new(

vec![NonZeroU64::new(2).unwrap(); 2].into(), // inner chunk shape

&data_type::float32(),

);

sharding_codec_builder.bytes_to_bytes_codecs(vec![

Arc::new(zarrs::array::codec::GzipCodec::new(5)?),

]);

let array = ArrayBuilder::new(

vec![8, 8], // array shape

vec![4, 4], // regular chunk shape

data_type::float32(),

f32::NAN,

)

.array_to_bytes_codec(sharding_codec_builder.build_arc())

.build(store.clone(), array_path)?;

array.store_metadata()?;

// array.async_store_metadata().await?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Creating a Zarr V3 Array from Metadata

An array can be created from ArrayMetadata instead of an ArrayBuilder if needed.

#![allow(unused)]

fn main() {

extern crate zarrs;

use std::sync::Arc;

use zarrs::array::Array;

use zarrs::metadata::ArrayMetadata;

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array_path = "/group/array";

let json: &str = r#"{

"zarr_format": 3,

"node_type": "array",

"shape": [

10000,

1000

],

"data_type": "float64",

"chunk_grid": {

"name": "regular",

"configuration": {

"chunk_shape": [

1000,

100

]

}

},

"chunk_key_encoding": {

"name": "default",

"configuration": {

"separator": "/"

}

},

"fill_value": "NaN",

"codecs": [

{

"name": "bytes",

"configuration": {

"endian": "little"

}

},

{

"name": "gzip",

"configuration": {

"level": 1

}

}

],

"attributes": {

"foo": 42,

"bar": "apples",

"baz": [

1,

2,

3,

4

]

},

"dimension_names": [

"rows",

"columns"

]

}"#;

// Parse the JSON metadata

let array_metadata: ArrayMetadata = serde_json::from_str(json)?;

// Create the array

let array = Array::new_with_metadata(

store.clone(),

"/group/array",

array_metadata.into(),

)?;

array.store_metadata()?;

// array.async_store_metadata().await?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Alternatively, ArrayMetadataV3 can be constructed with ArrayMetadataV3::new() and subsequent with_ methods:

#![allow(unused)]

fn main() {

extern crate zarrs;

use std::sync::Arc;

use zarrs::array::Array;

use zarrs::metadata::{ArrayMetadata, v3::ArrayMetadataV3};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

// Specify the array metadata

let array_metadata: ArrayMetadata = ArrayMetadataV3::new(

serde_json::from_str("[10, 10]")?,

serde_json::from_str(r#"{"name": "regular", "configuration":{"chunk_shape": [5, 5]}}"#)?,

serde_json::from_str(r#""float32""#)?,

serde_json::from_str("0.0")?,

serde_json::from_str(r#"[ {"name": "bytes", "configuration": { "endian": "little" } }, { "name": "blosc", "configuration": { "cname": "blosclz", "clevel": 9, "shuffle": "bitshuffle", "typesize": 4, "blocksize": 0 } } ]"#)?,

).with_chunk_key_encoding(

serde_json::from_str(r#"{"name": "default", "configuration": {"separator": "/"}}"#)?,

).with_attributes(

serde_json::from_str(r#"{"foo": 42, "bar": "apples", "baz": [1, 2, 3, 4]}"#)?,

)

// .with_dimension_names(

// Some(serde_json::from_str(r#"["y", "x"]"#)?),

// )

.into();

// Create the array

let array = Array::new_with_metadata(

store.clone(),

"/group/array",

array_metadata,

)?;

array.store_metadata()?;

// array.async_store_metadata().await?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Creating a Zarr V2 Array

The ArrayBuilder does not support Zarr V2 arrays.

Instead, they must be built from ArrayMetadataV2.

#![allow(unused)]

fn main() {

extern crate zarrs;

use std::sync::Arc;

use std::num::NonZeroU64;

use zarrs::array::Array;

use zarrs::metadata::{ArrayMetadata, ChunkKeySeparator, v2::ArrayMetadataV2, v2::FillValueMetadataV2, v2::ArrayMetadataV2Order};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

// Specify the array metadata

let array_metadata: ArrayMetadata = ArrayMetadataV2::new(

vec![10, 10], // array shape

vec![NonZeroU64::new(5).unwrap(); 2].into(), // regular chunk shape

">f4".into(), // big endian float32

FillValueMetadataV2::from(f32::NAN), // fill value

None, // compressor

None, // filters

)

.with_dimension_separator(ChunkKeySeparator::Slash)

.with_order(ArrayMetadataV2Order::F)

.into();

// Create the array

let array = Array::new_with_metadata(

store.clone(),

"/group/array",

array_metadata,

)?;

array.store_metadata()?;

// array.async_store_metadata().await?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Warning

Array::new_with_metadata can fail if Zarr V2 metadata is unsupported by zarrs.
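The V3 metadata examples above use the default chunk key encoding with a / separator, and the V2 example sets the dimension separator to /. The std-only sketch below shows the chunk keys these encodings produce, following the Zarr V3 and V2 specifications. The helper functions are hypothetical, not zarrs API, and keys are relative to the array's node path.

```rust
/// Zarr V3 "default" chunk key encoding: "c", then each chunk index preceded
/// by the separator ("/" or ".").
fn default_chunk_key(chunk_indices: &[u64], separator: &str) -> String {
    let mut key = String::from("c");
    for index in chunk_indices {
        key.push_str(separator);
        key.push_str(&index.to_string());
    }
    key
}

/// Zarr V2 chunk key: the chunk indices joined by the dimension separator,
/// or "0" for a zero-dimensional array.
fn v2_chunk_key(chunk_indices: &[u64], separator: &str) -> String {
    if chunk_indices.is_empty() {
        return "0".to_string();
    }
    chunk_indices
        .iter()
        .map(|index| index.to_string())
        .collect::<Vec<_>>()
        .join(separator)
}
```

For example, chunk [1, 2] of a V3 array at /group/array with the default encoding and a / separator would be stored under group/array/c/1/2.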

Mutating Array Metadata

The shape, dimension names, attributes, and additional fields of an array are mutable.

Don’t forget to write the metadata after mutating array metadata!

The next chapters detail the reading and writing of array data.

Reading Arrays

Overview

Array operations are divided into several categories based on the traits implemented for the backing storage.

This section focuses on the [Async]ReadableStorageTraits methods:

- retrieve_chunk_if_exists

- retrieve_chunk

- retrieve_chunks

- retrieve_chunk_subset

- retrieve_array_subset

- retrieve_encoded_chunk

- partial_decoder

Additional methods are offered by extension traits:

- ArrayShardedExt and ArrayShardedReadableExt: see Reading Sharded Arrays

- ArrayChunkCacheExt: see Chunk Caching

Method Variants

Many retrieve and store methods have multiple variants:

- _opt suffix variants have a CodecOptions parameter for fine-grained concurrency control and more.

- Variants without the _opt suffix use default CodecOptions.

- async_ prefix variants can be used with async stores (requires the async feature).

All store_* methods can store data implementing IntoArrayBytes.

All retrieve_* methods can retrieve data implementing FromArrayBytes.

Array Subsets

An ArraySubset represents a subset (region) of an array or chunk.

It encodes a starting coordinate and a shape, and is foundational for many array operations.

ArraySubsetTraits is implemented by ArraySubset and other types, such as &[Range<u64>], [Range<u64>; N], and Vec<Range<u64>>.

All array operations that reference a region of an array or chunk accept any type implementing ArraySubsetTraits.
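The range, start/shape, and start/end forms of a subset are equivalent, and converting between them can be sketched with std only. The helpers below are hypothetical, not the ArraySubset API. indices_c_order enumerates every index in C (row-major) order, with the last dimension varying fastest. Treat that ordering as an assumption about ArraySubset::indices() and confirm it in the zarrs documentation.

```rust
use std::ops::Range;

/// Start and shape of a subset given as per-dimension ranges.
fn start_shape(ranges: &[Range<u64>]) -> (Vec<u64>, Vec<u64>) {
    let start = ranges.iter().map(|range| range.start).collect();
    let shape = ranges.iter().map(|range| range.end - range.start).collect();
    (start, shape)
}

/// Inclusive end coordinates, or None if any dimension is empty.
fn end_inclusive(ranges: &[Range<u64>]) -> Option<Vec<u64>> {
    ranges
        .iter()
        .map(|range| if range.is_empty() { None } else { Some(range.end - 1) })
        .collect()
}

/// Every index in the subset, in C (row-major) order: the last dimension varies fastest.
fn indices_c_order(ranges: &[Range<u64>]) -> Vec<Vec<u64>> {
    // Start with one empty prefix and extend it one dimension at a time.
    let mut indices: Vec<Vec<u64>> = vec![Vec::new()];
    for range in ranges {
        indices = indices
            .into_iter()
            .flat_map(|prefix| {
                range.clone().map(move |i| {
                    let mut index = prefix.clone();
                    index.push(i);
                    index
                })
            })
            .collect();
    }
    indices
}
```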

Reading a Chunk

Reading and Decoding a Chunk

#![allow(unused)]

fn main() {

extern crate zarrs;

extern crate ndarray;

use zarrs::array::{Array, ArrayBuilder, data_type, ArrayBytes};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array = ArrayBuilder::new(vec![8, 16], vec![4, 4], data_type::float32(), 0.0f32) // 2x4 chunk grid

.build(store.clone(), "/array")?;

let chunk_indices: Vec<u64> = vec![1, 2];

let chunk_bytes: ArrayBytes = array.retrieve_chunk(&chunk_indices)?;

let chunk_elements: Vec<f32> = array.retrieve_chunk(&chunk_indices)?;

let chunk_array: ndarray::ArrayD<f32> = array.retrieve_chunk(&chunk_indices)?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Warning

Retrieving elements (e.g. Vec<f32>) or an ndarray::ArrayD will fail if the element type does not match the array data type. No conversion is performed.

Skipping Empty Chunks

Use retrieve_chunk_if_exists to only retrieve a chunk if it exists in the store (a chunk may be absent because it has yet to be written, or because it was composed entirely of the fill value and was not stored):

#![allow(unused)]

fn main() {

extern crate zarrs;

extern crate ndarray;

use zarrs::array::{Array, ArrayBuilder, data_type, ArrayBytes};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array = ArrayBuilder::new(vec![8, 16], vec![4, 4], data_type::float32(), 0.0f32) // 2x4 chunk grid

.build(store.clone(), "/array")?;

let chunk_indices: Vec<u64> = vec![1, 2];

let chunk_bytes: Option<ArrayBytes> =

array.retrieve_chunk_if_exists(&chunk_indices)?;

let chunk_elements: Option<Vec<f32>> =

array.retrieve_chunk_if_exists(&chunk_indices)?;

let chunk_array: Option<ndarray::ArrayD<f32>> =

array.retrieve_chunk_if_exists(&chunk_indices)?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Retrieving an Encoded Chunk

An encoded chunk can be retrieved without decoding with retrieve_encoded_chunk:

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::array::{Array, ArrayBuilder, data_type};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array = ArrayBuilder::new(vec![8, 16], vec![4, 4], data_type::float32(), 0.0f32) // 2x4 chunk grid

.build(store.clone(), "/array")?;

let chunk_indices: Vec<u64> = vec![1, 2];

let chunk_bytes_encoded: Option<Vec<u8>> =

array.retrieve_encoded_chunk(&chunk_indices)?;

Ok::<_, Box<dyn std::error::Error>>(())

}

This returns None if a chunk does not exist.

Parallelism and Concurrency

Codec and Chunk Parallelism

Codecs run in parallel on a threadpool.

Array store and retrieve methods will also run in parallel when they involve multiple chunks.

zarrs will automatically choose where to prioritise parallelism between codecs/chunks based on the codecs and number of chunks.

By default, all available CPU cores will be used (where possible/efficient).

Concurrency can be limited globally with Config::set_codec_concurrent_target or as required using _opt methods with CodecOptions populated with CodecOptions::set_concurrent_target.

Async API Concurrency

This crate is async runtime-agnostic. Async methods do not spawn tasks internally, so asynchronous storage calls are concurrent but not parallel. Codec encoding and decoding operations still execute in parallel (where supported) in an asynchronous context.

Due to the lack of parallelism, methods like async_retrieve_array_subset or async_retrieve_chunks do not parallelise over chunks and can be slow compared to the sync API.

Parallelism over chunks can be achieved by spawning tasks outside of zarrs.

If executing many tasks concurrently, consider reducing the codec concurrent_target.

Reading Chunks in Parallel

The retrieve_chunks methods perform chunk retrieval with chunk parallelism.

Rather than taking a &[u64] parameter of the indices of a single chunk, these methods take an &dyn ArraySubsetTraits representing the chunks.

Rather than returning a Vec for each chunk, the chunks are assembled into a single output for the entire region they cover:

#![allow(unused)]

fn main() {

extern crate zarrs;

extern crate ndarray;

use zarrs::array::{Array, ArrayBuilder, data_type, ArrayBytes};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array = ArrayBuilder::new(vec![8, 16], vec![4, 4], data_type::float32(), 0.0f32) // 2x4 chunk grid

.build(store.clone(), "/array")?;

let chunks_bytes: ArrayBytes = array.retrieve_chunks(&[0..2, 0..4])?;

let chunks_elements: Vec<f32> = array.retrieve_chunks(&[0..2, 0..4])?;

let chunks_array: ndarray::ArrayD<f32> = array.retrieve_chunks(&[0..2, 0..4])?;

Ok::<_, Box<dyn std::error::Error>>(())

}

retrieve_encoded_chunks differs in that it does not assemble the output.

Chunks returned are in order of the chunk indices returned by chunks.indices().into_iter():

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::array::{Array, ArrayBuilder, data_type};

use zarrs::array::CodecOptions;

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array = ArrayBuilder::new(vec![8, 16], vec![4, 4], data_type::float32(), 0.0f32) // 2x4 chunk grid

.build(store.clone(), "/array")?;

let chunk_bytes_encoded: Vec<Option<Vec<u8>>> = array.retrieve_encoded_chunks(&[0..2, 0..4], &CodecOptions::default())?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Reading a Chunk Subset

The below array subsets are all identical:

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::array::ArraySubset;

let subset = ArraySubset::new_with_ranges(&[2..6, 3..5]);

let subset = ArraySubset::new_with_start_shape(vec![2, 3], vec![4, 2])?;

let subset = ArraySubset::new_with_start_end_exc(vec![2, 3], vec![6, 5])?;

let subset = ArraySubset::new_with_start_end_inc(vec![2, 3], vec![5, 4])?;

Ok::<_, Box<dyn std::error::Error>>(())

}

The retrieve_chunk_subset methods can be used to retrieve a subset of a chunk:

#![allow(unused)]

fn main() {

extern crate zarrs;

extern crate ndarray;

use zarrs::array::{Array, ArrayBuilder, data_type, ArrayBytes};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array = ArrayBuilder::new(vec![8, 16], vec![4, 4], data_type::float32(), 0.0f32) // 2x4 chunk grid

.build(store.clone(), "/array")?;

let chunk_indices: Vec<u64> = vec![1, 2];

let chunk_subset_bytes: ArrayBytes = array.retrieve_chunk_subset(&chunk_indices, &[0..2, 0..2])?;

let chunk_subset_elements: Vec<f32> = array.retrieve_chunk_subset(&chunk_indices, &[0..2, 0..2])?;

let chunk_subset_array: ndarray::ArrayD<f32> = array.retrieve_chunk_subset(&chunk_indices, &[0..2, 0..2])?;

Ok::<_, Box<dyn std::error::Error>>(())

}

It is important to understand what is going on behind the scenes in these methods: a partial decoder is created that decodes the requested subset.

Warning

Many codecs do not support partial decoding, so partial decoding may result in reading and decoding entire chunks!
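To make the warning concrete, here is a stdlib-only sketch of what a partial decode degrades to when partial decoding is not supported: the whole chunk is decoded, then the requested region is copied out. It assumes the decoded chunk is a 2D buffer in C (row-major) order; `extract_subset_2d` is a hypothetical helper, not part of the zarrs API.

```rust
use std::ops::Range;

/// Copies the elements in `ranges` out of a fully decoded 2D chunk stored in
/// C (row-major) order, returning `None` if the subset is out of bounds.
fn extract_subset_2d<T: Copy>(
    chunk: &[T],
    chunk_shape: [usize; 2],
    ranges: [Range<usize>; 2],
) -> Option<Vec<T>> {
    let [rows, cols] = chunk_shape;
    let valid = chunk.len() == rows * cols
        && ranges.iter().all(|r| r.start <= r.end)
        && ranges[0].end <= rows
        && ranges[1].end <= cols;
    if !valid {
        return None;
    }
    let mut out = Vec::with_capacity(ranges[0].len() * ranges[1].len());
    for row in ranges[0].clone() {
        // Offset of the first element of this row within the decoded chunk.
        let row_start = row * cols;
        out.extend_from_slice(&chunk[row_start + ranges[1].start..row_start + ranges[1].end]);
    }
    Some(out)
}
```

Even a 2x2 request from a 4x4 chunk pays for decoding all 16 elements here, which is why chunk shape matters when codecs lack partial decoding support.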

Reading Multiple Chunk Subsets

If multiple chunk subsets are needed from a chunk, prefer to create a partial decoder and reuse it for each chunk subset.

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::array::{Array, ArrayBuilder, data_type, ArrayBytes};

use zarrs::array::CodecOptions;

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array = ArrayBuilder::new(vec![8, 16], vec![4, 4], data_type::float32(), 0.0f32) // 2x4 chunk grid

.build(store.clone(), "/array")?;

let chunk_indices: Vec<u64> = vec![1, 2];

let partial_decoder = array.partial_decoder(&chunk_indices)?;

let chunk_subsets_bytes_a: ArrayBytes = partial_decoder.partial_decode(&[0..2, 0..2], &CodecOptions::default())?;

let chunk_subsets_bytes_b: ArrayBytes = partial_decoder.partial_decode(&[2..4, 0..2], &CodecOptions::default())?;

let chunk_subsets_bytes_c: ArrayBytes = partial_decoder.partial_decode(&[0..2, 2..4], &CodecOptions::default())?;

Ok::<_, Box<dyn std::error::Error>>(())

}

On initialisation, partial decoders may insert a cache (depending on the codecs). For example, if a codec does not support partial decoding, its output (or an output of one of its predecessors in the codec chain) will be cached, and subsequent partial decoding operations will not access the store.
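The caching behaviour can be modelled with a small stdlib-only sketch. `CountingStore` and `CachingPartialDecoder` are hypothetical types (not zarrs types), and decoding is the identity. The point is that the store is read once when the decoder is created, after which every partial decode is served from the cache.

```rust
use std::cell::Cell;
use std::ops::Range;

/// Hypothetical store holding one encoded chunk and counting how often it is read.
struct CountingStore {
    chunk: Vec<u8>,
    reads: Cell<usize>,
}

impl CountingStore {
    fn get(&self) -> Vec<u8> {
        self.reads.set(self.reads.get() + 1);
        self.chunk.clone()
    }
}

/// Hypothetical partial decoder that caches the whole decoded chunk on creation,
/// as a decoder might when a codec in the chain cannot decode partially.
struct CachingPartialDecoder {
    decoded: Vec<u8>,
}

impl CachingPartialDecoder {
    fn new(store: &CountingStore) -> Self {
        // Decoding is the identity here; a real codec chain would decompress.
        Self { decoded: store.get() }
    }

    /// Serves a byte range from the cache without touching the store.
    fn partial_decode(&self, range: Range<usize>) -> Option<&[u8]> {
        self.decoded.get(range)
    }
}
```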

Reading an Array Subset

An arbitrary subset of an array can be read with the retrieve_array_subset methods:

#![allow(unused)]

fn main() {

extern crate zarrs;

extern crate ndarray;

use zarrs::array::{Array, ArrayBuilder, data_type, ArrayBytes};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array = ArrayBuilder::new(vec![8, 8], vec![4, 4], data_type::float32(), 0.0f32)

.build(store.clone(), "/array")?;

let subset_bytes: ArrayBytes = array.retrieve_array_subset(&[2..6, 3..5])?;

let subset_elements: Vec<f32> = array.retrieve_array_subset(&[2..6, 3..5])?;

let subset_array: ndarray::ArrayD<f32> = array.retrieve_array_subset(&[2..6, 3..5])?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Internally, these methods identify the overlapping chunks, call retrieve_chunk / retrieve_chunk_subset with chunk parallelism, and assemble the output.
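The first step, identifying the overlapping chunks, is simple arithmetic for a regular chunk grid. The following stdlib-only sketch assumes a regular grid and end-exclusive ranges; `overlapping_chunks` and `chunk_local_subset` are hypothetical helpers, not zarrs functions.

```rust
use std::ops::Range;

/// For a regular chunk grid, returns the range of chunk indices along each
/// dimension that overlap `subset` (all ranges end-exclusive).
fn overlapping_chunks(subset: &[Range<u64>], chunk_shape: &[u64]) -> Vec<Range<u64>> {
    subset
        .iter()
        .zip(chunk_shape)
        .map(|(r, &c)| {
            if r.start >= r.end {
                0..0 // an empty subset overlaps no chunks
            } else {
                // First chunk containing r.start, one past the chunk containing r.end - 1.
                (r.start / c)..((r.end - 1) / c + 1)
            }
        })
        .collect()
}

/// Intersection of `subset` with the chunk at `chunk_indices`, in chunk-local coordinates.
fn chunk_local_subset(subset: &[Range<u64>], chunk_shape: &[u64], chunk_indices: &[u64]) -> Vec<Range<u64>> {
    subset
        .iter()
        .zip(chunk_shape.iter().zip(chunk_indices))
        .map(|(r, (&c, &i))| {
            let origin = i * c;
            let start = r.start.max(origin);
            let end = r.end.min(origin + c).max(start); // empty if there is no overlap
            (start - origin)..(end - origin)
        })
        .collect()
}
```

For the [2..6, 3..5] subset of an 8x8 array with 4x4 chunks, all four chunks overlap, and each contributes only its intersection with the subset.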

Reading Inner Chunks (Sharded Arrays)

The sharding_indexed codec enables multiple sub-chunks (“inner chunks”) to be stored in a single chunk (“shard”).

With a sharded array, the chunk_grid and chunk indices in store/retrieve methods reference the chunks (“shards”) of an array.

The ArrayShardedExt trait provides additional methods to Array to query if an array is sharded and retrieve the inner chunk shape.

Additionally, the inner chunk grid can be queried, which is a ChunkGrid where chunk indices refer to inner chunks rather than shards.

The ArrayShardedReadableExt trait adds Array methods to conveniently and efficiently access the data in a sharded array (with _elements and _ndarray variants):

For unsharded arrays, these methods gracefully fall back to referencing standard chunks.

Each method has a cache parameter (ArrayShardedReadableExtCache) that stores shard indexes so that they do not have to be repeatedly retrieved and decoded.
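Every inner chunk lives inside exactly one shard. The stdlib-only sketch below shows that relationship for a regular grid where each shard shape is an exact multiple of the inner chunk shape (an assumption of the sketch); `locate_inner_chunk` is hypothetical and not part of ArrayShardedExt.

```rust
/// Maps inner chunk indices to (shard indices, inner chunk indices within that shard).
/// Assumes every shard dimension is an exact multiple of the inner chunk dimension,
/// so there is at least one inner chunk per shard along each dimension.
fn locate_inner_chunk(
    inner_indices: &[u64],
    shard_shape: &[u64],
    inner_chunk_shape: &[u64],
) -> (Vec<u64>, Vec<u64>) {
    let mut shard = Vec::with_capacity(inner_indices.len());
    let mut within = Vec::with_capacity(inner_indices.len());
    for ((&i, &s), &c) in inner_indices.iter().zip(shard_shape).zip(inner_chunk_shape) {
        let per_shard = s / c; // inner chunks per shard along this dimension
        shard.push(i / per_shard);
        within.push(i % per_shard);
    }
    (shard, within)
}
```

With 8x8 shards of 4x4 inner chunks, inner chunk [3, 1] sits in shard [1, 0] at position [1, 1] within that shard.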

Querying Chunk Bounds

Several convenience methods are available for querying the underlying chunk grid:

- chunk_origin: Get the origin of a chunk.

- chunk_shape: Get the shape of a chunk.

- chunk_subset: Get the ArraySubset of a chunk.

- chunk_subset_bounded: Get the ArraySubset of a chunk, bounded by the array shape.

- chunks_subset / chunks_subset_bounded: Get the ArraySubset of a group of chunks.

- chunks_in_array_subset: Get the chunks in an ArraySubset.

An ArraySubset spanning an array can be retrieved with subset_all.
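For a regular grid, these queries reduce to simple arithmetic. This stdlib-only sketch uses hypothetical function names (not the zarrs methods) to show the difference between an unbounded and a bounded chunk extent for an edge chunk that overhangs the array.

```rust
/// Origin of a chunk in a regular grid: chunk index times chunk shape, per dimension.
fn regular_chunk_origin(chunk_indices: &[u64], chunk_shape: &[u64]) -> Vec<u64> {
    chunk_indices.iter().zip(chunk_shape).map(|(&i, &c)| i * c).collect()
}

/// (start, end-exclusive) of a chunk per dimension; `end` is clipped to the array
/// shape when `bounded` is true, so edge chunks do not extend past the array.
fn regular_chunk_extent(
    chunk_indices: &[u64],
    chunk_shape: &[u64],
    array_shape: &[u64],
    bounded: bool,
) -> Vec<(u64, u64)> {
    regular_chunk_origin(chunk_indices, chunk_shape)
        .into_iter()
        .zip(chunk_shape.iter().zip(array_shape))
        .map(|(start, (&c, &a))| {
            let end = start + c;
            (start, if bounded { end.min(a) } else { end })
        })
        .collect()
}
```

For a 10x8 array with 4x4 chunks, chunk [2, 1] starts at [8, 4]; its unbounded extent reaches row 12, while the bounded extent stops at row 10.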

Iterating Over Chunks / Regions

Iterating over chunks or regions is a common pattern. There are several approaches.
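All of these approaches walk over chunk index tuples such as those produced by ArraySubset::indices. The stdlib-only sketch below enumerates the index tuples of a set of ranges like an odometer, with the last dimension varying fastest (C order). The function is hypothetical, and that it matches the exact order used by zarrs is an assumption.

```rust
use std::ops::Range;

/// Enumerates every index tuple in `ranges`, last dimension varying fastest.
fn indices_c_order(ranges: &[Range<u64>]) -> Vec<Vec<u64>> {
    if ranges.iter().any(|r| r.start >= r.end) {
        return Vec::new(); // any empty dimension means there are no indices
    }
    let mut out = Vec::new();
    let mut current: Vec<u64> = ranges.iter().map(|r| r.start).collect();
    loop {
        out.push(current.clone());
        // Increment like an odometer, starting from the last dimension.
        let mut dim = ranges.len();
        loop {
            if dim == 0 {
                return out; // every dimension wrapped around: done
            }
            dim -= 1;
            current[dim] += 1;
            if current[dim] < ranges[dim].end {
                break;
            }
            current[dim] = ranges[dim].start;
        }
    }
}
```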

Serial Chunk Iteration

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::array::ArraySubset;

let chunks = ArraySubset::new_with_ranges(&[0..2, 0..4]);

let indices = chunks.indices();

for chunk_indices in indices {

// Process chunk_indices

}

}

Parallel Chunk Iteration

#![allow(unused)]

fn main() {

extern crate zarrs;

extern crate rayon;

use zarrs::array::ArraySubset;

use rayon::iter::{IntoParallelIterator, ParallelIterator};

let chunks = ArraySubset::new_with_ranges(&[0..2, 0..4]);

let indices = chunks.indices();

indices.into_par_iter().try_for_each(|chunk_indices| {

// Process chunk_indices

Ok::<(), zarrs::array::ArrayError>(())

})?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Warning

Reading chunks in parallel (as above) can use a lot of memory if chunks are large.

The zarrs crate internally uses a macro from the rayon_iter_concurrent_limit crate to limit chunk parallelism where reasonable.

This macro is a simple wrapper over .into_par_iter().chunks(...).<func>.

For example:

#![allow(unused)]

fn main() {

extern crate zarrs;

extern crate rayon;

extern crate rayon_iter_concurrent_limit;

use zarrs::array::ArraySubset;

use rayon::iter::{IntoParallelIterator, ParallelIterator};

let chunks = ArraySubset::new_with_ranges(&[0..2, 0..4]);

let indices = chunks.indices();

let chunk_concurrent_limit: usize = 4;

rayon_iter_concurrent_limit::iter_concurrent_limit!(

chunk_concurrent_limit,

indices,

try_for_each,

|chunk_indices| {

// Process chunk indices

Ok::<(), zarrs::array::ArrayError>(())

}

)?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Chunk Caching

The standard Array retrieve methods do not perform any chunk caching.

This means that requesting the same chunk again will result in another read from the store.

The ArrayChunkCacheExt trait adds Array retrieve methods that support chunk caching.

Various types of chunk caches are supported (e.g. encoded cache, decoded cache, chunk limited, size limited, thread local, etc.).

See the Chunk Caching section of the Array docs for more information on these methods.

Chunk caching is likely to be effective for remote stores where redundant retrievals are costly. However, chunk caching may not outperform disk caching with a filesystem store. The caches use internal locking to support multithreading, which has a performance overhead.

Warning

Prefer not to use a chunk cache if chunks are not accessed repeatedly. Cached retrieve methods do not use partial decoders, and any intersected chunk is fully decoded if not present in the cache.

For many access patterns, chunk caching may reduce performance. Benchmark your algorithm/data.
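To see why caching only pays off for repeated access, consider a chunk-limited cache with least-recently-used eviction. The sketch below is hypothetical and single-threaded (the zarrs caches use internal locking instead). `store_reads` counts the misses that would hit the store.

```rust
use std::collections::{HashMap, VecDeque};

/// Hypothetical chunk-limited cache: holds at most `capacity` decoded chunks and
/// evicts the least recently used one.
struct ChunkLimitedCache {
    capacity: usize,
    chunks: HashMap<Vec<u64>, Vec<f32>>,
    recency: VecDeque<Vec<u64>>, // front = least recently used
    store_reads: usize,
}

impl ChunkLimitedCache {
    fn new(capacity: usize) -> Self {
        assert!(capacity > 0, "capacity must be at least one chunk");
        Self { capacity, chunks: HashMap::new(), recency: VecDeque::new(), store_reads: 0 }
    }

    /// Returns a chunk, calling `load` (standing in for retrieve + decode) only on a miss.
    fn get_or_load(&mut self, key: &[u64], load: impl FnOnce() -> Vec<f32>) -> &[f32] {
        if self.chunks.contains_key(key) {
            // Hit: drop the old recency entry; it is re-added at the back below.
            self.recency.retain(|k| k.as_slice() != key);
        } else {
            // Miss: evict the least recently used chunk if the cache is full.
            self.store_reads += 1;
            if self.chunks.len() == self.capacity {
                if let Some(lru) = self.recency.pop_front() {
                    self.chunks.remove(&lru);
                }
            }
            self.chunks.insert(key.to_vec(), load());
        }
        self.recency.push_back(key.to_vec());
        &self.chunks[key]
    }
}
```

A scan that touches each chunk once gets no hits at all from such a cache, yet still pays for the bookkeeping and for decoding whole chunks.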

Reading a String Array

A string array can be read as normal with any of the array retrieve methods.

#![allow(unused)]

fn main() {

extern crate zarrs;

extern crate ndarray;

use zarrs::array::{Array, ArrayBuilder, data_type, FillValue};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array = ArrayBuilder::new(

vec![4, 4],

vec![2, 2],

data_type::string(),

FillValue::from(""),

).build(store.clone(), "/array")?;

let chunks_elements: Vec<String> = array.retrieve_chunks(&[0..2, 0..2])?;

let chunks_array: ndarray::ArrayD<String> = array.retrieve_chunks(&[0..2, 0..2])?;

Ok::<_, Box<dyn std::error::Error>>(())

}

However, this results in a string allocation per element.

This can be avoided by retrieving the bytes directly and converting them to a Vec of string references.

For example:

#![allow(unused)]

fn main() {

extern crate zarrs;

extern crate ndarray;

extern crate itertools;

use zarrs::array::{Array, ArrayBuilder, data_type, ArrayBytes, FillValue};

use ndarray::ArrayD;

use itertools::Itertools;

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array = ArrayBuilder::new(

vec![4, 4],

vec![2, 2],

data_type::string(),

FillValue::from(""),

).build(store.clone(), "/array")?;

let chunks_bytes: ArrayBytes = array.retrieve_chunks(&[0..2, 0..2])?;

let (bytes, offsets) = chunks_bytes.into_variable()?.into_parts();

let string = String::from_utf8(bytes.into_owned())?;

let chunks_elements: Vec<&str> = offsets

.iter()

.tuple_windows()

.map(|(&curr, &next)| &string[curr..next])

.collect();

let subset_all = array.subset_all();

let chunks_array =

ArrayD::<&str>::from_shape_vec(subset_all.shape_usize(), chunks_elements)?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Writing Arrays

Array write methods are separated based on two storage traits:

- [Async]WritableStorageTraits methods perform write operations exclusively, and

- [Async]ReadableWritableStorageTraits methods perform write operations and may perform read operations.

Warning

Misuse of [Async]ReadableWritableStorageTraits Array methods can result in data loss due to partial writes being lost. zarrs does not currently offer a “synchronisation” API for locking chunks or array subsets.

Write-Only Methods

The [Async]WritableStorageTraits grouped methods exclusively perform write operations:

Store a Chunk

#![allow(unused)]

fn main() {

extern crate zarrs;

extern crate ndarray;

use zarrs::array::{Array, ArrayBytes, ArrayBuilder, data_type};

use ndarray::ArrayD;

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array = ArrayBuilder::new(vec![8, 16], vec![4, 4], data_type::float32(), 0.0f32) // 2x4 chunk grid

.build(store.clone(), "/array")?;

let chunk_indices: Vec<u64> = vec![1, 2];

let chunk_bytes: ArrayBytes = vec![0u8; 4 * 4 * 4].into(); // 4x4 chunk of f32

array.store_chunk(&chunk_indices, chunk_bytes)?;

let chunk_elements: Vec<f32> = vec![1.0; 4 * 4];

array.store_chunk(&chunk_indices, &chunk_elements)?;

let chunk_array = ArrayD::<f32>::from_shape_vec(

vec![4, 4], // chunk shape

chunk_elements

)?;

array.store_chunk(&chunk_indices, chunk_array)?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Tip

If a chunk is written more than once, its element values depend on whichever operation wrote to the chunk last.

Store Chunks

store_chunks (and variants) will disassemble the input into chunks, and encode and store them in parallel.
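Conceptually, the disassembly step slices a region covering a block of chunks into one buffer per chunk. The stdlib-only sketch below covers only the 2D case with equally sized chunks in C order; `disassemble_2d` is a hypothetical helper, not the zarrs implementation.

```rust
/// Splits a C-order 2D buffer covering a `grid` of equally sized chunks into
/// per-chunk C-order buffers, returned in C order of chunk indices.
fn disassemble_2d<T: Copy>(data: &[T], grid: [usize; 2], chunk_shape: [usize; 2]) -> Vec<Vec<T>> {
    let [grid_rows, grid_cols] = grid;
    let [chunk_rows, chunk_cols] = chunk_shape;
    let row_len = grid_cols * chunk_cols; // elements per row of the whole region
    assert_eq!(data.len(), grid_rows * chunk_rows * row_len, "input must cover the chunk grid");
    let mut chunks = Vec::with_capacity(grid_rows * grid_cols);
    for gr in 0..grid_rows {
        for gc in 0..grid_cols {
            let mut chunk = Vec::with_capacity(chunk_rows * chunk_cols);
            for r in 0..chunk_rows {
                // Start of row `r` of chunk (gr, gc) within the region buffer.
                let start = (gr * chunk_rows + r) * row_len + gc * chunk_cols;
                chunk.extend_from_slice(&data[start..start + chunk_cols]);
            }
            chunks.push(chunk);
        }
    }
    chunks
}
```

Each resulting buffer can then be encoded independently, which is what makes chunk-parallel storage possible.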

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::array::{Array, ArrayBytes, ArrayBuilder, data_type};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array = ArrayBuilder::new(vec![8, 8], vec![4, 4], data_type::float32(), 0.0f32)

.build(store.clone(), "/array")?;

let chunks_bytes: ArrayBytes = vec![0u8; 2 * 2 * 4 * 4 * 4].into(); // 2x2 chunks of 4x4 f32

array.store_chunks(&[0..2, 0..2], chunks_bytes)?;

// store_chunks_elements, store_chunks_ndarray...

Ok::<_, Box<dyn std::error::Error>>(())

}

Store an Encoded Chunk

An encoded chunk can be stored directly with store_encoded_chunk, bypassing the zarrs codec pipeline.

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::array::{Array, ArrayBuilder, data_type};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array = ArrayBuilder::new(vec![8, 16], vec![4, 4], data_type::float32(), 0.0f32) // 2x4 chunk grid

.build(store.clone(), "/array")?;

let chunk_indices: Vec<u64> = vec![1, 2];

let encoded_chunk_bytes: Vec<u8> = vec![0u8; 4 * 4 * 4]; // pre-encoded bytes

// SAFETY: the array's only codec is the default bytes codec, and 4 * 4 * 4 zero bytes are a valid encoding of a 4x4 f32 chunk regardless of endianness

unsafe { array.store_encoded_chunk(&chunk_indices, encoded_chunk_bytes.into())? };

Ok::<_, Box<dyn std::error::Error>>(())

}

Tip

Currently, the most performant path for uncompressed writing on Linux is to reuse page aligned buffers via store_encoded_chunk with direct IO enabled for the FilesystemStore. See zarrs GitHub issue #58 for a discussion of this method.

Read-Write Methods

The [Async]ReadableWritableStorageTraits grouped methods perform write operations and may perform read operations:

These methods perform partial encoding. Codecs that do not support true partial encoding will retrieve chunks in their entirety, then decode, update, and store them.

It is the responsibility of zarrs consumers to ensure:

- store_chunk_subset is not called concurrently on the same chunk, and

- store_array_subset is not called concurrently on array subsets sharing chunks.

Partial writes to a chunk may be lost if these rules are not respected.
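The failure mode is the classic lost update of a read-modify-write cycle. The sketch below simulates two interleaved writers deterministically in a single thread; `Store`, `read_chunk`, and `modify_and_write` are hypothetical stand-ins for a chunk that is retrieved, decoded, updated, and stored in its entirety.

```rust
use std::collections::HashMap;

/// Hypothetical in-memory store of decoded chunks keyed by chunk index.
type Store = HashMap<u64, Vec<i32>>;

/// Read step: fetch a full copy of the chunk.
fn read_chunk(store: &Store, chunk: u64) -> Vec<i32> {
    store[&chunk].clone()
}

/// Modify + write step: update one element of the copy and write the whole chunk back.
fn modify_and_write(store: &mut Store, chunk: u64, mut snapshot: Vec<i32>, index: usize, value: i32) {
    snapshot[index] = value;
    store.insert(chunk, snapshot);
}
```

If writers A and B both read chunk 0 before either writes, B's write-back replaces the whole chunk with a copy that never saw A's update, so A's element is silently lost.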

Store a Chunk Subset

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::array::{Array, ArrayBuilder, data_type};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array = ArrayBuilder::new(vec![16, 8], vec![4, 4], data_type::float32(), 0.0f32)

.build(store.clone(), "/array")?;

array.store_chunk_subset(

// chunk indices

&[3, 1],

// subset within chunk

&[1..2, 0..4],

// subset elements

&[-4.0f32; 4],

)?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Store an Array Subset

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::array::{Array, ArrayBuilder, data_type};

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array = ArrayBuilder::new(vec![8, 8], vec![4, 4], data_type::float32(), 0.0f32)

.build(store.clone(), "/array")?;

array.store_array_subset(&[0..8, 6..7], &[123.0f32; 8])?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Partial Encoding with the Sharding Codec

In zarrs, the sharding_indexed codec is the only codec that supports real partial encoding if the Experimental Partial Encoding option is enabled.

If disabled (default), chunks are always fully decoded and updated before being stored.

To enable partial encoding:

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::array::CodecOptions;

// Set experimental_partial_encoding to true by default

zarrs::config::global_config_mut().set_experimental_partial_encoding(true);

// Manually set experimental_partial_encoding to true for an operation

let mut options = CodecOptions::default();

options.set_experimental_partial_encoding(true);

}

Warning

The asynchronous API does not yet support partial encoding.

Enabling experimental partial encoding allows Array::store_array_subset, Array::store_chunk_subset, Array::partial_encoder, and variants to use partial encoding for sharded arrays.

Inner chunks can be written in an append-only fashion without reading previously written inner chunks (if their elements do not require updating).

Warning

Since partial encoding is append-only for sharded arrays, updating a chunk does not remove the originally encoded data. Make sure to align writes to the inner chunks, otherwise your shards will be much larger than they should be.
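A simplified model shows why repeated or misaligned writes inflate shards. In the hypothetical `AppendOnlyShard` below, each write appends encoded bytes and repoints an index entry, so superseded bytes stay behind as dead space. A real sharding_indexed shard also stores an encoded shard index, which this sketch omits.

```rust
/// Hypothetical append-only shard: encoded inner chunks are appended to `data`,
/// and `index` records (offset, length) of the latest version of each inner chunk.
struct AppendOnlyShard {
    data: Vec<u8>,
    index: Vec<Option<(usize, usize)>>,
}

impl AppendOnlyShard {
    fn new(inner_chunks: usize) -> Self {
        Self { data: Vec::new(), index: vec![None; inner_chunks] }
    }

    /// Writes an inner chunk by appending it; any previous version stays in `data`.
    fn write_inner(&mut self, inner: usize, encoded: &[u8]) {
        let offset = self.data.len();
        self.data.extend_from_slice(encoded);
        self.index[inner] = Some((offset, encoded.len()));
    }

    fn read_inner(&self, inner: usize) -> Option<&[u8]> {
        let (offset, len) = self.index[inner]?;
        Some(&self.data[offset..offset + len])
    }

    /// Bytes still referenced by the index; the remainder of `data` is dead space.
    fn live_bytes(&self) -> usize {
        self.index.iter().flatten().map(|&(_, len)| len).sum()
    }
}
```

After rewriting one of two 8-byte inner chunks, the shard holds 24 bytes of which only 16 are live.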

Converting Zarr V2 to V3

CLI tool

zarrs_reencode is a CLI tool that supports Zarr V2 to V3 conversion. See the zarrs_reencode section.

Changing the Internal Representation

When an array or group is initialised, it internally holds metadata in the Zarr version it was created with.

To change the internal representation to Zarr V3, call to_v3() on an Array or Group, then call store_metadata() to update the stored metadata.

V2 metadata must be explicitly erased if needed (see below).

Note

While zarrs fully supports manipulation of Zarr V2 and V3 hierarchies (with supported codecs, data types, etc.), it only supports forward conversion of metadata from Zarr V2 to V3.

Convert a Group to V3

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::group::Group;

use zarrs::metadata::{GroupMetadata, v3::GroupMetadataV3};

use zarrs::config::MetadataEraseVersion;

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let group = Group::new_with_metadata(store, "/group", GroupMetadataV3::new().into())?;

let group = group.to_v3();

group.store_metadata()?;

// group.async_store_metadata().await?;

group.erase_metadata_opt(MetadataEraseVersion::V2)?;

// group.async_erase_metadata_opt(MetadataEraseVersion::V2).await?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Convert an Array to V3

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::array::{Array, ArrayBuilder, data_type};

use zarrs::config::MetadataEraseVersion;

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let array = ArrayBuilder::new(

vec![8, 8], // array shape

vec![4, 4], // regular chunk shape

data_type::float32(),

f32::NAN,

).build(store.clone(), "/array")?;

let array = array.to_v3()?;

array.store_metadata()?;

// array.async_store_metadata().await?;

array.erase_metadata_opt(MetadataEraseVersion::V2)?;

// array.async_erase_metadata_opt(MetadataEraseVersion::V2).await?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Note that Array::to_v3() is fallible because some V2 metadata is not V3 compatible.

Writing Versioned Metadata Explicitly

Rather than changing the internal representation, an alternative is to just write metadata with a specified version.

For groups, the store_metadata_opt method accepts a GroupMetadataOptions argument.

GroupMetadataOptions currently has only one option that impacts the Zarr version of the metadata.

By default, GroupMetadataOptions keeps the current Zarr version.

To write Zarr V3 metadata:

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::group::{Group, GroupMetadataOptions};

use zarrs::metadata::{GroupMetadata, v3::GroupMetadataV3};

use zarrs::config::MetadataConvertVersion;

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let group = Group::new_with_metadata(store, "/group", GroupMetadataV3::new().into())?;

group.store_metadata_opt(&

GroupMetadataOptions::default()

.with_metadata_convert_version(MetadataConvertVersion::V3)

)?;

// group.async_store_metadata_opt(...).await?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Warning

zarrs does not support converting Zarr V3 metadata to Zarr V2.

Note that the original metadata is not automatically deleted. If you want to delete it:

#![allow(unused)]

fn main() {

extern crate zarrs;

use zarrs::group::Group;

use zarrs::metadata::v3::GroupMetadataV3;

use zarrs::config::MetadataEraseVersion;

let store = std::sync::Arc::new(zarrs::storage::store::MemoryStore::new());

let group = Group::new_with_metadata(store, "/group", GroupMetadataV3::new().into())?;

group.erase_metadata_opt(MetadataEraseVersion::V2)?;

// group.async_erase_metadata_opt(MetadataEraseVersion::V2).await?;

Ok::<_, Box<dyn std::error::Error>>(())

}

Tip

The store_metadata methods of Array and Group internally call store_metadata_opt. Global defaults can be changed, see zarrs::global::Config.

ArrayMetadataOptions has similar options for changing the Zarr version of the metadata.

It also has various other configuration options, see its documentation.

Extension Points

The Zarr v3 specification defines several explicit extension points, which are specific components of the Zarr model that can be replaced or augmented with custom implementations:

- Codecs: Codecs define how chunk data is transformed between its in-memory representation and its stored (bytes) representation. Zarr allows chaining multiple codecs, creating sophisticated data transformation pipelines.

- Data types: The element representation of the array. Array-to-array codecs may change the data type of chunk data on encoding, but this is reversed on decoding.

- Chunk Grids: Define how the N-dimensional array space is partitioned into chunks. The specification only defines a regular grid, and a rectangular grid is proposed.

- Chunk Key Encoding: Specifies how the logical coordinates of a chunk are mapped to the string key used for storage (e.g., (0, 1, 0) to c/0/1/0, c.0.1.0, 0/1/0, etc.). The specification defines default and v2 encodings.

- Storage Transformers: These modify the interaction between the logical Zarr hierarchy (groups, arrays, chunks) and the underlying storage system. The specification does not define any storage transformers.

- Stores: While not strictly an extension point, the ability to interact with different storage backends is a crucial aspect of Zarr’s flexibility.

The zarrs library, like other Zarr implementations, leverages this by providing its own Storage API for reading, writing, and listing data. zarrs can work with virtually any storage system: in-memory buffers, local filesystems, object stores (like S3 or GCS), databases, etc.

Data Type Extensions

According to the Zarr V3 specification:

A data type defines the set of possible values that an array may contain. For example, the 32-bit signed integer data type defines binary representations for all integers in the range −2,147,483,648 to 2,147,483,647.

The specification defines a limited set of data types, but additional data types can be defined as extensions.

zarrs supports a number of extension data types, many of which are registered in the zarr-extensions repository.

This chapter explains how to create custom data types with a guided walkthrough.

Implementing a Custom Data Type

To open/read/write/create a Zarr Array with a custom data type, the following traits must be implemented:

Core Traits

| Trait | Crate | Required | Description |
|---|---|---|---|
| DataTypeTraits | zarrs_data_type | Yes | Core data type properties (size, fill value handling, etc.) |
| DataTypeTraitsV3 | zarrs_data_type | For V3 | Creation from Zarr V3 metadata (for plugin registration) |
| DataTypeTraitsV2 | zarrs_data_type | For V2 | Creation from Zarr V2 metadata (for plugin registration) |
| ExtensionAliases<ZarrVersion3> | zarrs_plugin | For V3 | Defines the extension name and aliases for Zarr V3 metadata |
| ExtensionAliases<ZarrVersion2> | zarrs_plugin | For V2 | Defines the extension name and aliases for Zarr V2 metadata |

Additionally, the data type must be registered as a DataTypePluginV3 and/or DataTypePluginV2 using inventory.

Codec Traits

The following traits enable compatibility with specific codecs.

See the zarrs_data_type::codec_traits module for convenience macros.

| Trait | Crate | Required | Description |
|---|---|---|---|
| BytesDataTypeTraits | zarrs_data_type | For bytes codec | Endianness handling for the bytes codec |
| PackBitsDataTypeTraits | zarrs_data_type | For packbits codec | Bit packing/unpacking for the packbits codec |
| BitroundDataTypeTraits | zarrs_data_type | For bitround codec | Bit rounding for floating-point types |
| FixedScaleOffsetDataTypeTraits | zarrs_data_type | For fixedscaleoffset codec | Fixed scale/offset encoding |
| PcodecDataTypeTraits | zarrs_data_type | For pcodec codec | Pcodec compression |
| ZfpDataTypeTraits | zarrs_data_type | For zfp codec | ZFP compression for floating-point types |

Element Traits

These traits are implemented on the in-memory representation of the data type (e.g., a newtype wrapper like UInt10DataTypeElement), not on the data type struct itself.

Alternatively, existing Element implementations for standard numeric types (e.g., u16) can be used by setting compatible_element_types in DataTypeTraits.

| Trait | Crate | Required | Description |
|---|---|---|---|
| Element | zarrs | For Array store methods | Conversion from in-memory elements to array bytes |
| ElementOwned | zarrs | For Array retrieve methods | Conversion from array bytes to in-memory elements |

Example: The uint10 Data Type

This example demonstrates how to implement a hypothetical uint10 data type as a custom extension.

A 10-bit unsigned integer can represent values in the range [0, 1023] and can be supported by the bytes and packbits codecs.

The uint10 data type has no configuration, so it can be represented by a unit struct:

#![allow(unused)]

fn main() {

/// The `uint10` data type.

#[derive(Debug, Clone, Copy)]

struct UInt10DataType;

}

Extension Name/Alias Registration

In zarrs 0.23.0, extension names and aliases are managed through the zarrs_plugin::ExtensionAliases<V> trait.

The zarrs_plugin::impl_extension_aliases! macro provides a convenient way to implement these traits:

At compile time, extensions are registered (via inventory) so that they can be identified and created when opening an array.

Extensions are registered for usage with Zarr V3 metadata and/or V2 if applicable.

Note that some extension types (e.g. chunk grids) are not supported at all in Zarr V2.

For example:

extern crate inventory;

extern crate zarrs;

extern crate zarrs_plugin;

extern crate zarrs_data_type;

extern crate zarrs_metadata;

use std::sync::Arc;

use zarrs_metadata::v3::MetadataV3;

#[derive(Debug)]

struct UInt10DataType;

zarrs_plugin::impl_extension_aliases!(UInt10DataType, v3: "uint10");

inventory::submit! {

zarrs_data_type::DataTypePluginV3::new::<UInt10DataType>(|_: &MetadataV3| Ok(Arc::new(UInt10DataType).into()))

}

Implementing DataTypeTraits

To be used as a data type extension, UInt10DataType must implement the DataTypeTraits trait (from zarrs_data_type).

This defines properties of the data type, such as conversion to/from fill value metadata, size (fixed or variable), and a method to .

#![allow(unused)]

fn main() {

extern crate zarrs_data_type;

extern crate zarrs_metadata;

extern crate zarrs_plugin;

extern crate inventory;

use std::convert::TryInto;

use std::sync::Arc;

use std::any::Any;

use zarrs_data_type::{DataTypeTraits, DataTypeTraitsV3, DataTypeFillValueMetadataError, DataTypeFillValueError, FillValue};

use zarrs_metadata::{Configuration, DataTypeSize, FillValueMetadata, v3::MetadataV3};

use zarrs_plugin::PluginCreateError;

// Define a marker struct for the data type

// A custom data type could also be a struct holding configuration parameters

#[derive(Debug)]

struct UInt10DataType;

// Register the data type

zarrs_plugin::impl_extension_aliases!(UInt10DataType, v3: "uint10");

impl DataTypeTraitsV3 for UInt10DataType {

fn create(_metadata: &MetadataV3) -> Result<zarrs_data_type::DataType, PluginCreateError> {

// NOTE: Should validate that metadata is empty / missing

Ok(Arc::new(UInt10DataType).into())

}

}

inventory::submit! {

zarrs_data_type::DataTypePluginV3::new::<UInt10DataType>()

}

impl DataTypeTraits for UInt10DataType {

fn configuration(&self, _zarr_version: zarrs_plugin::ZarrVersion) -> Configuration {

Configuration::default()

}

fn size(&self) -> DataTypeSize {

DataTypeSize::Fixed(2)

}

fn fill_value(

&self,

fill_value_metadata: &FillValueMetadata,

_version: zarrs_plugin::ZarrVersion,

) -> Result<FillValue, DataTypeFillValueMetadataError> {

let int = fill_value_metadata.as_u64().ok_or(DataTypeFillValueMetadataError)?;

// uint10 range: 0 to 1023

if int > 1023 {

return Err(DataTypeFillValueMetadataError);

}

#[expect(clippy::cast_possible_truncation)]

Ok(FillValue::from(int as u16))

}

fn metadata_fill_value(

&self,

fill_value: &FillValue,

) -> Result<FillValueMetadata, DataTypeFillValueError> {

let bytes: [u8; 2] = fill_value.as_ne_bytes().try_into().map_err(|_| DataTypeFillValueError)?;

let number = u16::from_ne_bytes(bytes);

Ok(FillValueMetadata::from(number))

}

fn compatible_element_types(&self) -> &'static [std::any::TypeId] {

// Declare compatibility with u16 for Element trait implementations

const TYPES: [std::any::TypeId; 1] = [std::any::TypeId::of::<u16>()];

&TYPES

}

fn as_any(&self) -> &dyn Any {

self

}

}

}

Implementing BytesDataTypeTraits

Supporting the bytes codec for the uint10 data type requires handling endianness conversion.

The impl_bytes_data_type_traits! macro from zarrs_data_type provides a convenient implementation that reverses byte order if necessary (depending on system endianness and the bytes codec endianness configuration):

#![allow(unused)]

fn main() {

extern crate zarrs_data_type;

extern crate zarrs_plugin;

#[derive(Debug)]

struct UInt10DataType;

zarrs_data_type::codec_traits::impl_bytes_data_type_traits!(UInt10DataType, 2);

}

The arguments are:

- Data type struct

- Fixed size in bytes (2 for uint10)
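Conceptually, the byte-order handling amounts to reversing the bytes of each fixed-size element whenever the configured endianness differs from the host. This stdlib-only sketch illustrates that idea; the `Endian` enum and `to_endian` function are hypothetical and are not the macro's generated code.

```rust
/// Byte order of a hypothetical bytes codec configuration.
#[derive(Clone, Copy, Debug, PartialEq)]
enum Endian {
    Little,
    Big,
}

/// Converts native-endian fixed-size elements to `target` byte order by reversing
/// the bytes of each element only when `target` differs from the host byte order.
fn to_endian(native_bytes: &[u8], element_size: usize, target: Endian) -> Vec<u8> {
    assert!(element_size > 0 && native_bytes.len() % element_size == 0);
    let host = if cfg!(target_endian = "little") { Endian::Little } else { Endian::Big };
    let mut out = native_bytes.to_vec();
    if target != host {
        for element in out.chunks_exact_mut(element_size) {
            element.reverse();
        }
    }
    out
}
```

Because reversing is its own inverse, the same function also converts encoded bytes back to native order on decode.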

Implementing PackBitsDataTypeTraits

The uint10 data type supports the packbits codec as a 10-bit value.

The impl_pack_bits_data_type_traits! macro from zarrs_data_type provides a convenient implementation:

#![allow(unused)]

fn main() {

extern crate zarrs_data_type;

extern crate zarrs_plugin;

#[derive(Debug)]

struct UInt10DataType;

zarrs_data_type::codec_traits::impl_pack_bits_data_type_traits!(UInt10DataType, 10, unsigned, 1);

}

The arguments are:

- Data type struct

- Bits per component (10 for uint10)

- Sign type (unsigned or signed)

- Number of components (1 for scalar types)
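The benefit of declaring 10 bits per component is density: four uint10 values fit in 5 bytes instead of the 8 bytes they occupy as u16. The stdlib-only sketch below packs values least significant bit first. The functions are hypothetical, and the bit order and padding of the real packbits codec are defined by its specification and may differ from this sketch.

```rust
/// Packs `bits`-wide unsigned values into bytes, least significant bit first.
fn pack_bits(values: &[u16], bits: usize) -> Vec<u8> {
    assert!((1..=16).contains(&bits));
    let mut out = vec![0u8; (values.len() * bits).div_ceil(8)];
    for (i, &v) in values.iter().enumerate() {
        for b in 0..bits {
            if (v >> b) & 1 == 1 {
                let pos = i * bits + b; // absolute bit position in the output
                out[pos / 8] |= 1 << (pos % 8);
            }
        }
    }
    out
}

/// Inverse of `pack_bits` for `count` values.
fn unpack_bits(packed: &[u8], bits: usize, count: usize) -> Vec<u16> {
    (0..count)
        .map(|i| {
            (0..bits).fold(0u16, |acc, b| {
                let pos = i * bits + b;
                let bit = (packed[pos / 8] >> (pos % 8)) & 1;
                acc | (u16::from(bit) << b)
            })
        })
        .collect()
}
```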

Using Existing Element Implementations

zarrs provides Element and ElementOwned implementations for standard numeric types like u16.

To enable these existing implementations for a custom data type, override the compatible_element_types method in DataTypeTraits to return the compatible Rust types.

For uint10, we declared compatibility with u16 above:

fn compatible_element_types(&self) -> &'static [std::any::TypeId] {

const TYPES: [std::any::TypeId; 1] = [std::any::TypeId::of::<u16>()];

&TYPES

}

This allows using u16 directly with Array::store_* and Array::retrieve_* methods without implementing custom Element traits.

Note that this does NOT enforce that values are in the valid uint10 range of [0, 1023], so a custom type is recommended for stricter type safety.
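One way to get stricter type safety is a range-checked newtype, so out-of-range values are rejected at construction rather than written silently. The `UInt10` type below is a hypothetical stdlib-only sketch; it does not implement any zarrs traits by itself.

```rust
use std::convert::TryFrom;

/// Hypothetical range-checked wrapper: construction fails outside [0, 1023].
#[derive(Clone, Copy, Debug, PartialEq)]
struct UInt10(u16);

impl UInt10 {
    const MAX: u16 = 1023;

    fn get(self) -> u16 {
        self.0
    }
}

impl TryFrom<u16> for UInt10 {
    type Error = u16; // the rejected value

    fn try_from(value: u16) -> Result<Self, Self::Error> {
        if value <= Self::MAX {
            Ok(UInt10(value))
        } else {
            Err(value)
        }
    }
}
```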

Custom Element Implementations

For custom data types where existing Element implementations are not suitable, you can define a newtype wrapper and implement Element and ElementOwned manually.

For example, here is how uint10 could be supported as a newtype wrapper around u16:

#![allow(unused)]

fn main() {

/// The in-memory representation of the `uint10` data type.

#[derive(Clone, Copy, Debug, PartialEq)]

struct UInt10DataTypeElement(u16);

}

A custom element type must implement the Element trait to be used in Array::store_* methods with Vec or ndarray inputs.

use zarrs::array::{ArrayBytes, ArrayError, DataType, Element};

impl Element for UInt10DataTypeElement {

fn validate_data_type(data_type: &DataType) -> Result<(), ArrayError> {

data_type

.is::<UInt10DataType>()

.then_some(())

.ok_or(ArrayError::IncompatibleElementType)

}

fn to_array_bytes<'a>(

data_type: &DataType,

elements: &'a [Self],

) -> Result<ArrayBytes<'a>, ArrayError> {

Self::validate_data_type(data_type)?;

// Validate all elements are in the valid uint10 range

for element in elements {

if element.0 > 1023 {

return Err(ArrayError::InvalidDataValue);

}

}

// Convert to native endian bytes

let bytes: Vec<u8> = elements

.iter()

.flat_map(|e| e.0.to_ne_bytes())

.collect();

Ok(ArrayBytes::from(bytes))

}

fn into_array_bytes(

data_type: &DataType,

elements: Vec<Self>,

) -> Result<ArrayBytes<'static>, ArrayError> {

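// `to_array_bytes` borrows the local `elements`, so convert the result into owned bytes

Ok(Self::to_array_bytes(data_type, &elements)?.into_owned())

}

}

A custom element type must implement the ElementOwned trait to be used in Array::retrieve_* methods with Vec or ndarray outputs.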

use zarrs::array::{ArrayBytes, ArrayError, DataType, ElementOwned};

impl ElementOwned for UInt10DataTypeElement {

fn from_array_bytes(

data_type: &DataType,

bytes: ArrayBytes<'_>,

) -> Result<Vec<Self>, ArrayError> {

Self::validate_data_type(data_type)?;

let bytes = bytes.into_fixed()?;

if bytes.len() % 2 != 0 {

return Err(ArrayError::InvalidDataValue);

}

let elements: Vec<Self> = bytes

.as_chunks::<2>()

.0

.iter()

.map(|chunk| {

let value = u16::from_ne_bytes(*chunk);

UInt10DataTypeElement(value)

})

.collect();

for element in &elements {

if element.0 > 1023 {

return Err(ArrayError::InvalidDataValue);

}

}

Ok(elements)

}

}

More Examples

The zarrs repository includes multiple custom data type examples:

- custom_data_type_uint12.rs

- custom_data_type_uint4.rs

- custom_data_type_float8_e3m4.rs

- custom_data_type_fixed_size.rs

- custom_data_type_variable_size.rs

Contributing New Data Types to zarrs

The zarr-extensions repository is always growing with new Zarr extensions.

The conformance of zarrs to zarr-extensions is tracked in this issue:

Contributions adding support for additional data types are welcome.

Codec Extensions

Among the most impactful and frequently utilised extension points are codecs. At their core, codecs define the transformations applied to array chunk data as it moves between its logical, in-memory representation and its serialized, stored representation as a sequence of bytes.

Codecs are the workhorses behind essential Zarr features like compression (reducing storage size and transfer time) and filtering (rearranging or modifying data to improve compression effectiveness). The Zarr v3 specification allows for a pipeline of codecs to be defined for each array, where the output of one codec becomes the input for the next during encoding, and the process is reversed during decoding.
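The ordering rule (encode first to last, decode last to first) can be illustrated with a toy std-only pipeline. The ToyCodec trait and the Delta and Reverse codecs below are hypothetical and much simpler than the zarrs codec traits; they only show why decoding must walk the pipeline in reverse:

```rust
/// Hypothetical, simplified bytes-to-bytes codec interface (not the zarrs traits).
trait ToyCodec {
    fn encode(&self, input: Vec<u8>) -> Vec<u8>;
    fn decode(&self, input: Vec<u8>) -> Vec<u8>;
}

/// Delta filter: stores each byte as the (wrapping) difference from its predecessor.
struct Delta;

impl ToyCodec for Delta {
    fn encode(&self, input: Vec<u8>) -> Vec<u8> {
        let mut prev = 0u8;
        input
            .into_iter()
            .map(|b| {
                let d = b.wrapping_sub(prev);
                prev = b;
                d
            })
            .collect()
    }

    fn decode(&self, input: Vec<u8>) -> Vec<u8> {
        let mut prev = 0u8;
        input
            .into_iter()
            .map(|d| {
                prev = d.wrapping_add(prev);
                prev
            })
            .collect()
    }
}

/// Reverses byte order; it is its own inverse.
struct Reverse;

impl ToyCodec for Reverse {
    fn encode(&self, mut input: Vec<u8>) -> Vec<u8> {
        input.reverse();
        input
    }

    fn decode(&self, input: Vec<u8>) -> Vec<u8> {
        self.encode(input)
    }
}

/// Encoding applies the codecs first to last.
fn encode_pipeline(codecs: &[Box<dyn ToyCodec>], data: Vec<u8>) -> Vec<u8> {
    codecs.iter().fold(data, |acc, codec| codec.encode(acc))
}

/// Decoding applies the codecs last to first.
fn decode_pipeline(codecs: &[Box<dyn ToyCodec>], data: Vec<u8>) -> Vec<u8> {
    codecs.iter().rev().fold(data, |acc, codec| codec.decode(acc))
}

fn main() {
    let codecs: Vec<Box<dyn ToyCodec>> = vec![Box::new(Delta), Box::new(Reverse)];
    let encoded = encode_pipeline(&codecs, vec![1, 2, 3, 10]);
    println!("{:?}", encoded); // prints "[7, 1, 1, 1]"
    assert_eq!(decode_pipeline(&codecs, encoded), vec![1, 2, 3, 10]);
}
```

Decoding in the forward order instead would give [10, 9, 8, 7] here, which is why the order is reversed.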

Types of Codecs

The Zarr v3 specification categorizes codecs based on the type of data they operate on and produce:

- Array-to-Array (A->A) Codecs: These codecs transform an array chunk before it is serialized into bytes. They operate on an in-memory array representation and produce another in-memory array representation. Examples could include codecs that transpose data within a chunk, change the data type (e.g., float32 to float16), or apply operations that make an array more amenable to compression.

- Array-to-Bytes (A->B) Codecs: This type of codec handles the crucial step of converting an in-memory array chunk into a sequence of bytes. This typically involves flattening the multidimensional array data and handling endianness conversions if necessary. Every codec pipeline must include exactly one A->B codec.

- Bytes-to-Bytes (B->B) Codecs: These codecs take a sequence of bytes as input and produce a sequence of bytes as output. This is the category where most common compression algorithms (like blosc, zstd, gzip) and byte-level filters (like shuffle for improving compressibility, or checksums) reside. Multiple B->B codecs can be chained together.
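Put together, these categories imply an ordering rule for a pipeline: any number of A->A codecs, then exactly one A->B codec, then any number of B->B codecs. A std-only sketch of that check follows; CodecKind and validate_pipeline are hypothetical names, not part of the zarrs API:

```rust
/// The three codec categories described above.
#[derive(Clone, Copy, Debug, PartialEq)]
enum CodecKind {
    ArrayToArray,
    ArrayToBytes,
    BytesToBytes,
}

/// Checks the ordering rule: any number of A->A codecs, then exactly one
/// A->B codec, then any number of B->B codecs.
fn validate_pipeline(kinds: &[CodecKind]) -> Result<(), String> {
    let mut seen_array_to_bytes = false;
    for (i, &kind) in kinds.iter().enumerate() {
        match kind {
            CodecKind::ArrayToArray if seen_array_to_bytes => {
                return Err(format!("codec {}: A->A codec after the A->B codec", i));
            }
            CodecKind::ArrayToBytes if seen_array_to_bytes => {
                return Err(format!("codec {}: more than one A->B codec", i));
            }
            CodecKind::BytesToBytes if !seen_array_to_bytes => {
                return Err(format!("codec {}: B->B codec before the A->B codec", i));
            }
            CodecKind::ArrayToBytes => seen_array_to_bytes = true,
            CodecKind::ArrayToArray | CodecKind::BytesToBytes => {}
        }
    }
    if seen_array_to_bytes {
        Ok(())
    } else {
        Err("missing A->B codec".to_string())
    }
}

fn main() {
    use CodecKind::*;
    // prints "Ok(())"
    println!("{:?}", validate_pipeline(&[ArrayToArray, ArrayToBytes, BytesToBytes, BytesToBytes]));
    // prints "Err("codec 0: B->B codec before the A->B codec")"
    println!("{:?}", validate_pipeline(&[BytesToBytes]));
}
```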

Codecs in zarrs

The zarrs library mirrors these conceptual types using a set of Rust traits. To implement a custom codec, you must implement the following traits depending on the codec type:

- A->A: CodecTraits + ArrayCodecTraits + ArrayToArrayCodecTraits

- A->B: CodecTraits + ArrayCodecTraits + ArrayToBytesCodecTraits

- B->B: CodecTraits + BytesToBytesCodecTraits

The traits are:

- CodecTraits: defines the codec configuration creation method, the unique zarrs codec identifier, and capabilities related to partial decoding/encoding.

- ArrayCodecTraits: defines the recommended_concurrency and partial_decode_granularity methods.

- ArrayToArrayCodecTraits / ArrayToBytesCodecTraits / BytesToBytesCodecTraits: define the codec encode and decode methods (including partial encoding and decoding), as well as methods for querying the encoded representation.

These traits define the necessary encode and decode methods (complete and partial), methods for inspecting the encoded chunk representation, hints for concurrent processing, and more.
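The way these traits stack can be sketched with a heavily simplified, std-only analogue. ToyCodecTraits, ToyBytesToBytesCodecTraits and XorCodec are hypothetical; their methods are far smaller than the real zarrs signatures and only illustrate how a B->B codec implements a base trait plus a category-specific trait, and how generic code can rely on both through a supertrait bound:

```rust
/// Hypothetical, heavily simplified analogue of CodecTraits (not the zarrs signatures).
trait ToyCodecTraits {
    /// A unique identifier for the codec.
    fn identifier(&self) -> &'static str;
}

/// Hypothetical analogue of BytesToBytesCodecTraits. Requiring ToyCodecTraits
/// mirrors the "CodecTraits + BytesToBytesCodecTraits" requirement for B->B codecs.
trait ToyBytesToBytesCodecTraits: ToyCodecTraits {
    fn encode(&self, decoded: &[u8]) -> Vec<u8>;
    fn decode(&self, encoded: &[u8]) -> Vec<u8>;
}

/// A toy B->B codec that XORs every byte with a fixed key.
struct XorCodec {
    key: u8,
}

impl ToyCodecTraits for XorCodec {
    fn identifier(&self) -> &'static str {
        "example.xor"
    }
}

impl ToyBytesToBytesCodecTraits for XorCodec {
    fn encode(&self, decoded: &[u8]) -> Vec<u8> {
        decoded.iter().map(|b| b ^ self.key).collect()
    }

    fn decode(&self, encoded: &[u8]) -> Vec<u8> {
        // XOR with the same key is its own inverse.
        self.encode(encoded)
    }
}

/// Generic code can use methods from both traits through the supertrait bound.
fn round_trip<C: ToyBytesToBytesCodecTraits>(codec: &C, data: &[u8]) -> Vec<u8> {
    println!("round trip through {}", codec.identifier());
    codec.decode(&codec.encode(data))
}

fn main() {
    let codec = XorCodec { key: 0x5A };
    assert_eq!(round_trip(&codec, b"zarr"), b"zarr".to_vec());
}
```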

The best way to learn to implement a new codec is to look at the existing codecs implemented in zarrs.

Example: An LZ4 Bytes-to-Bytes Codec

LZ4 is a common lossless compression algorithm.

Let’s implement the numcodecs.lz4 codec, which is supported by zarr-python 3.0.0+ for Zarr V3 data.

The Lz4CodecConfiguration Struct

Looking at the docs for the numcodecs LZ4 codec, it has a single "acceleration" parameter.

The valid range for "acceleration" is not documented, but the LZ4 library itself clamps the acceleration between 1 and the maximum supported acceleration.

So any i32 can be permitted here, and there is no need to follow the New Type Idiom.
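The clamping behaviour that makes this safe can be sketched as follows. This is an assumption about how lz4.c sanitises its acceleration argument (values below 1 fall back to the default of 1, large values are capped at LZ4_ACCELERATION_MAX, taken here to be 65537 as in recent lz4.c releases); effective_acceleration is a hypothetical helper, not part of zarrs or any LZ4 binding:

```rust
/// Assumed value of LZ4_ACCELERATION_MAX in recent lz4.c releases.
const LZ4_ACCELERATION_MAX: i32 = 65537;

/// Hypothetical sketch of how an out-of-range acceleration is assumed to be treated:
/// values below 1 become 1 (the default), large values are capped.
fn effective_acceleration(acceleration: i32) -> i32 {
    if acceleration < 1 {
        1
    } else {
        acceleration.min(LZ4_ACCELERATION_MAX)
    }
}

fn main() {
    for &a in [-5, 0, 1, 8, i32::MAX].iter() {
        println!("{} -> {}", a, effective_acceleration(a));
    }
}
```

Because every i32 maps to a usable value, the configuration struct does not need to reject any input.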

The expected form of the codec in array metadata is:

[

...

{

"name": "numcodecs.lz4",

"configuration": {

"acceleration": 1

}

}

...

]

The configuration can be represented by a simple struct:

#![allow(unused)]

fn main() {

extern crate serde;

extern crate derive_more;

/// `lz4` codec configuration parameters

#[derive(serde::Serialize, serde::Deserialize, Clone, Eq, PartialEq, Debug, derive_more::Display)]

#[display("{}", serde_json::to_string(self).unwrap_or_default())]

pub struct Lz4CodecConfiguration {

pub acceleration: i32

}

}

Note that codec configurations in zarrs_metadata are versioned so that they can adapt to potential codec specification revisions.

The Lz4Codec Struct

Now create the codec struct.

For encoding, the acceleration needs to be known, so this must be a field of the struct:

#![allow(unused)]

fn main() {

#[derive(serde::Serialize, serde::Deserialize, Clone, Eq, PartialEq, Debug, derive_more::Display)]

#[display("{}", serde_json::to_string(self).unwrap_or_default())]

pub struct Lz4CodecConfiguration { pub acceleration: i32 }

/// An `lz4` codec implementation.

#[derive(Clone, Debug)]

pub struct Lz4Codec {

acceleration: i32

}

impl Lz4Codec {

/// Create a new `lz4` codec.

#[must_use]

pub fn new(acceleration: i32) -> Self {

Self { acceleration }

}

/// Create a new `lz4` codec from configuration.

#[must_use]

pub fn new_with_configuration(configuration: &Lz4CodecConfiguration) -> Self {

Self { acceleration: configuration.acceleration }

}

}

}

ExtensionIdentifier and ExtensionAliases Traits

In zarrs 0.23.0, codec identity and aliasing are managed through the ExtensionIdentifier and ExtensionAliases<V> traits.

The impl_extension_aliases! macro from zarrs_plugin (zarrs::plugin) provides a convenient way to implement these traits:

#![allow(unused)]

fn main() {

extern crate zarrs;

pub struct Lz4Codec { acceleration: i32 }

zarrs_plugin::impl_extension_aliases!(Lz4Codec, v3: "example.lz4");

}

This macro generates implementations for both ExtensionIdentifier (providing the IDENTIFIER constant) and ExtensionAliases for Zarr V2 and V3.

The generated ExtensionIdentifier::IDENTIFIER constant can be used throughout your codec implementation.
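As a rough, std-only analogue, an associated identifier constant and an alias list might be used like this. The Identified and V3Names traits, their constants, and ToyLz4Codec are all hypothetical; this is not how zarrs_plugin resolves aliases internally, only an illustration of matching a metadata name against a default name plus aliases and reusing an identifier constant inside a codec:

```rust
/// Hypothetical stand-in for a trait that exposes a codec identifier constant.
trait Identified {
    const IDENTIFIER: &'static str;
}

/// Hypothetical table of names accepted in Zarr V3 metadata.
trait V3Names {
    const V3_NAME: &'static str;
    const V3_ALIASES: &'static [&'static str];

    /// True if `name` is the default V3 name or one of its aliases.
    fn matches_v3(name: &str) -> bool {
        name == Self::V3_NAME || Self::V3_ALIASES.iter().any(|&alias| alias == name)
    }
}

struct ToyLz4Codec;

impl Identified for ToyLz4Codec {
    const IDENTIFIER: &'static str = "example.lz4";
}

impl V3Names for ToyLz4Codec {
    const V3_NAME: &'static str = "example.lz4";
    const V3_ALIASES: &'static [&'static str] = &["numcodecs.lz4"];
}

impl ToyLz4Codec {
    /// The identifier constant can be reused, e.g. in error messages.
    fn unsupported(name: &str) -> String {
        format!("{}: unsupported metadata name {}", Self::IDENTIFIER, name)
    }
}

fn main() {
    for name in ["example.lz4", "numcodecs.lz4", "lz4"].iter() {
        if ToyLz4Codec::matches_v3(name) {
            println!("{} -> {}", name, ToyLz4Codec::IDENTIFIER);
        } else {
            println!("{}", ToyLz4Codec::unsupported(name));
        }
    }
}
```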

For more complex aliasing needs (e.g., different aliases for V2 vs V3, or regex patterns), the macro supports additional forms:

// V3 aliases only

zarrs_plugin::impl_extension_aliases!(Lz4Codec, v3: "example.lz4", ["numcodecs.lz4"]);

// V2 and V3 aliases

zarrs_plugin::impl_extension_aliases!(Lz4Codec, "example.lz4",

v3: "example.lz4", ["numcodecs.lz4"],

v2: "example.lz4", ["lz4"]

);

CodecTraits

Now we implement the CodecTraits, which are required for every codec.

use std::any::Any;

use zarrs::array::codec::{

CodecTraits, CodecMetadataOptions, PartialDecoderCapability, PartialEncoderCapability

};